Whoops. I posted to mozilla.dev.apps.thunderbird about an updated version of logsploder at the end of 2009, but forgot to blog about it. I do so now (briefly) for my own retrospective interest and those who like cropped screenshots.

The gloda (global database) tests and various Thunderbird mozmill tests have been augmented for some time (1+ years) to support rich logging, where handlers are given the chance to extract the salient attributes of objects and convert them to JSON for marshaling. When the fancy logging mode is enabled, extra loggers are also registered (like Thunderbird’s nsIMsgFolderListener) to provide more context for what is going on around testing operations.
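To make the "handlers extract salient attributes and convert them to JSON" idea concrete, here is a minimal sketch in Python. It is not the actual logHelper code (which is JavaScript); the registry, `register_extractor`, `to_loggable`, `log_event` and the `FakeMsgHdr` class are all made-up names for illustration only.

```python
import json

# Hypothetical registry: maps a type to a function that pulls out the
# attributes worth logging. This mirrors the idea, not logHelper's real API.
_extractors = {}

def register_extractor(cls, fn):
    """Register fn(obj) -> dict of salient attributes for instances of cls."""
    _extractors[cls] = fn

def to_loggable(obj):
    """Convert obj into something json.dumps can handle."""
    for cls, fn in _extractors.items():
        if isinstance(obj, cls):
            # A registered handler knows what matters about this object.
            return {"type": cls.__name__, **fn(obj)}
    if isinstance(obj, (str, int, float, bool, type(None))):
        return obj
    # Fallback for things no handler understands: never throw, just describe.
    return {"type": type(obj).__name__, "repr": repr(obj)}

def log_event(category, *things):
    """Serialize one log event as a JSON string ready to be marshaled."""
    return json.dumps({"category": category,
                       "parts": [to_loggable(t) for t in things]})

# Illustrative stand-in for a message header object (an assumption).
class FakeMsgHdr:
    def __init__(self, folder_uri, key, subject):
        self.folder_uri = folder_uri
        self.key = key
        self.subject = subject

register_extractor(
    FakeMsgHdr,
    lambda h: {"uri": f"{h.folder_uri}#{h.key}", "subject": h.subject},
)
```

Calling `log_event("gloda.test", "indexed", FakeMsgHdr("imap://user@localhost/gabba13", 1, "hi"))` would yield a JSON blob in which the header shows up as a small typed object rather than an opaque string, which is the kind of thing a viewer can later render as a clickable rich representation.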

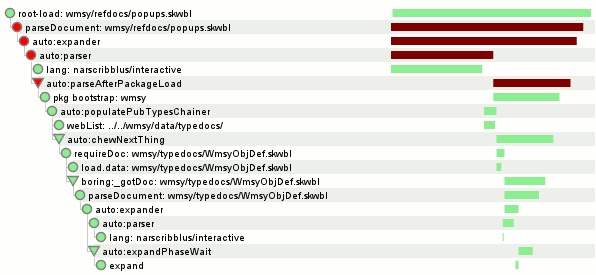

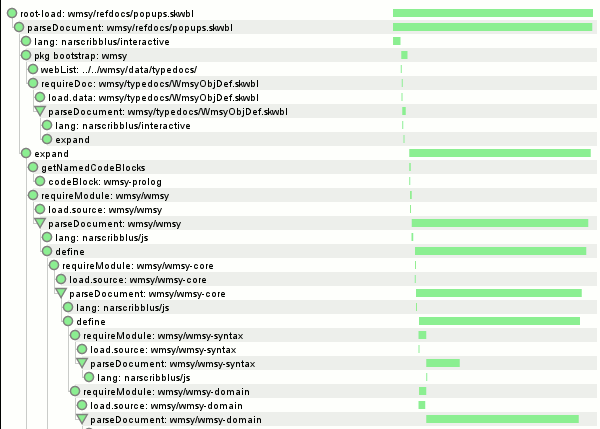

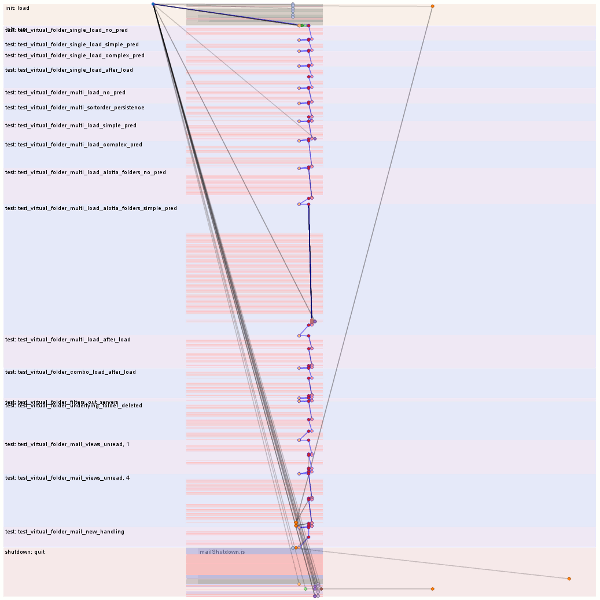

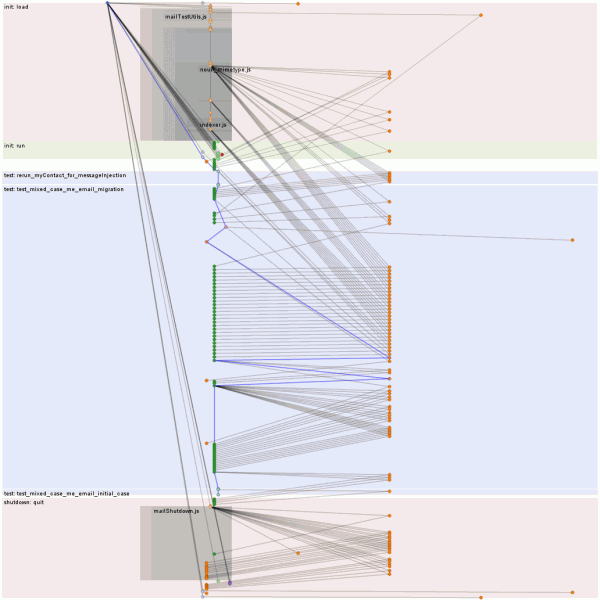

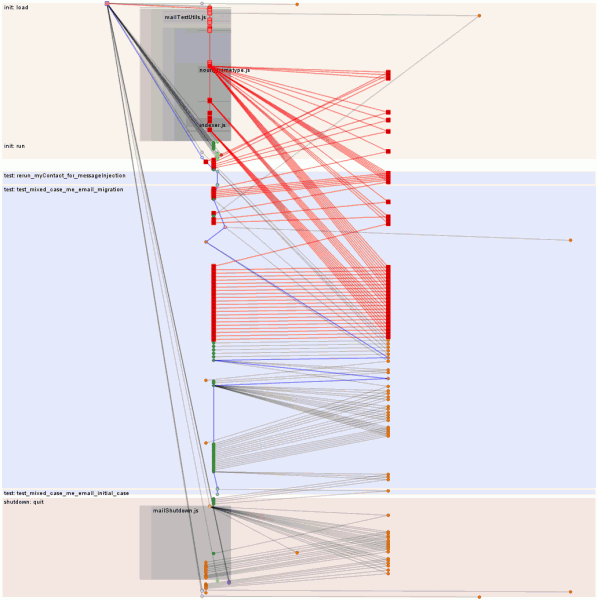

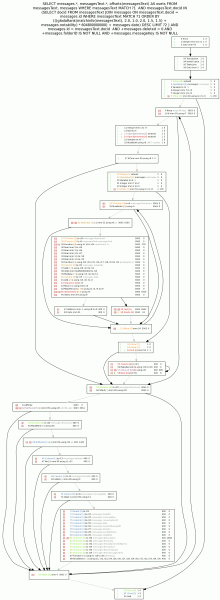

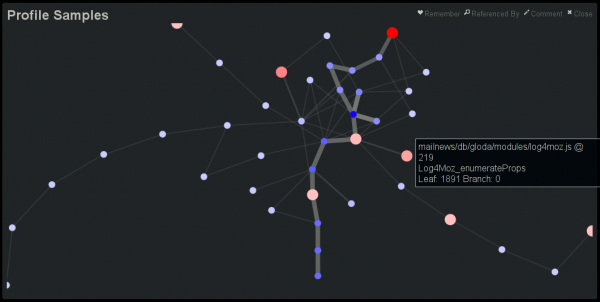

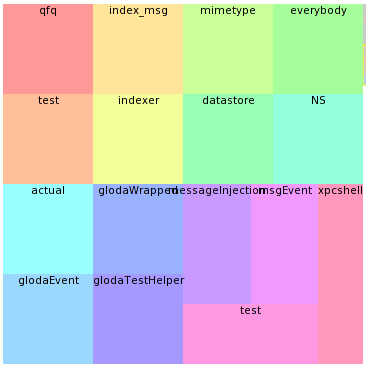

For a given test (file) run, logsploder provides an overview in two parts: a hierarchical treemap that identifies the logger categories used in the test, and a small-multiples timeline display that uses the treemap’s layout to characterize the volume of messages logged to each category in a given time slice. The idea is that you can see at a glance the subsystems active for each time slice.

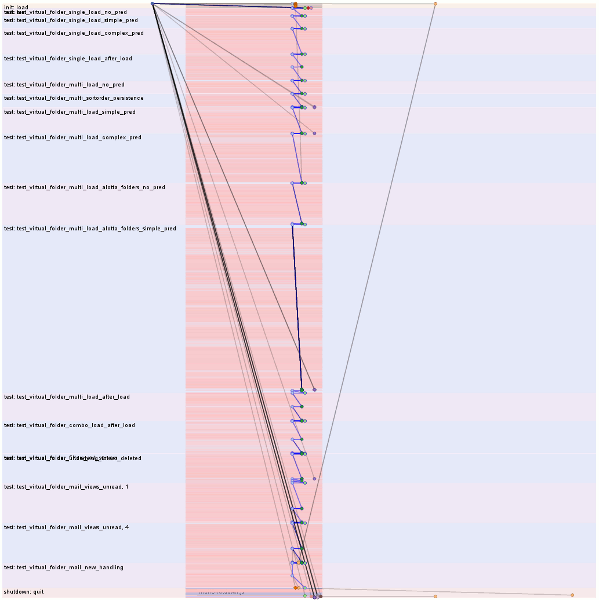

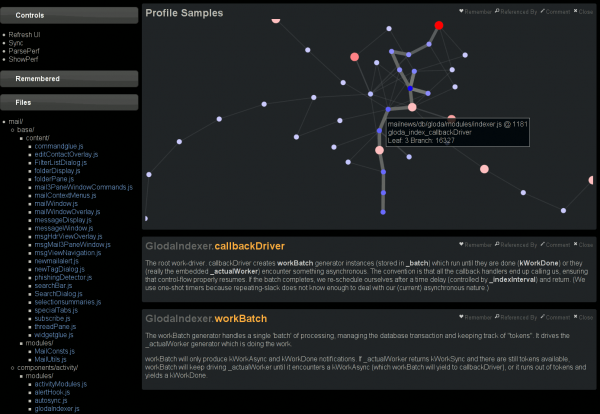
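The per-category, per-time-slice message volume behind the timeline can be sketched roughly as follows. This is a Python illustration under my own assumptions (events as `(timestamp_ms, category)` pairs, a fixed slice width, the name `slice_counts`), not logsploder's actual code.

```python
from collections import Counter, defaultdict

def slice_counts(events, slice_ms):
    """Bucket log events into fixed-width time slices.

    events: list of (timestamp_ms, category) tuples (an assumed shape).
    Returns {slice_index: Counter({category: message_count})}, where slice 0
    starts at the earliest timestamp seen.
    """
    if not events:
        return {}
    start = min(ts for ts, _ in events)
    slices = defaultdict(Counter)
    for ts, category in events:
        # Integer division assigns each event to its time slice.
        slices[(ts - start) // slice_ms][category] += 1
    return dict(slices)

# Made-up sample data, purely for illustration.
sample = [
    (1000, "gloda.indexer"),
    (1010, "gloda.indexer"),
    (1120, "imap"),
    (1250, "gloda.indexer"),
]
counts = slice_counts(sample, slice_ms=100)
```

Each slice's counter could then be drawn as one small multiple, with every category occupying the same rectangle it has in the treemap and shaded by its count.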

Logsploder can retain multiple test file runs in memory, and knows the tests and sub-tests run in each test file (xpcshell) run. Tests/sub-tests are not green/red coded because xpcshell tests give up as soon as the first failure is encountered, so there is no real point:

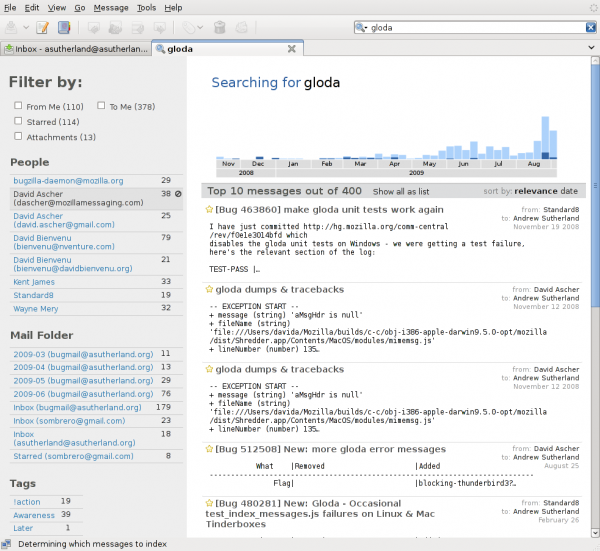

Clicking on a test/subtest automatically selects the first time slice during which it was active.

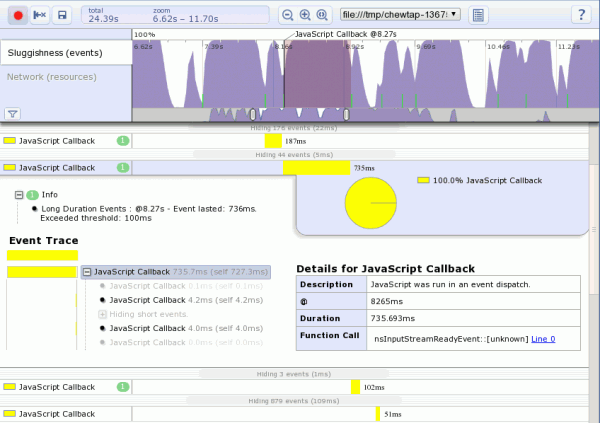

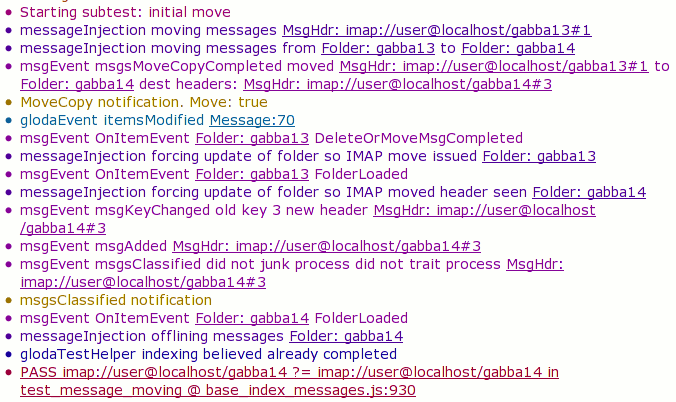

Selecting a time slice presents us with a simple list of the events that occurred during that time slice. Each event is colored (although I think darkened?) based on its logging category:

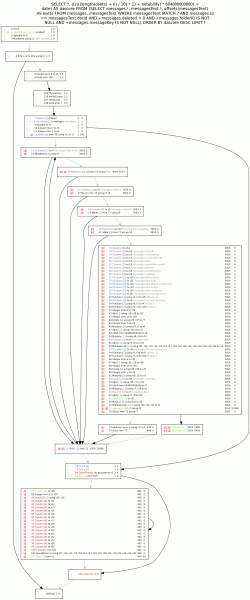

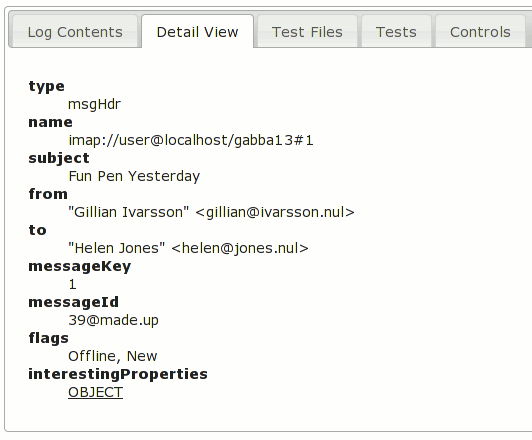

Underlined things are rich object representations that can be clicked on to show additional details. For example, if we click on the very first underlined thing, the “MsgHdr: imap://user@localhost/gabba13#1” entry, we get:

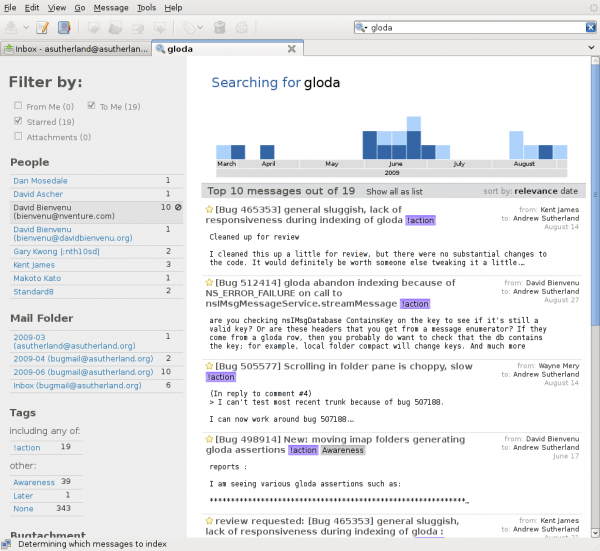

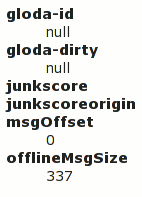

And if we click on the interestingProperties OBJECT:

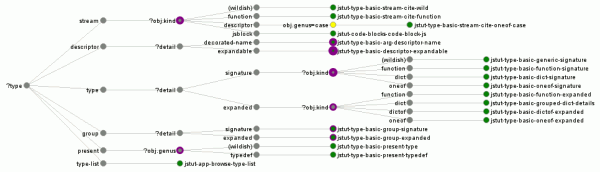

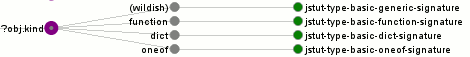

Logsploder was a pre-wmsy (the widget framework that I’ll be talking about more starting a few weeks from now) tool whose UI implementation informed wmsy. Which is to say, the need to switch to a different tab or click on the “OBJECT” rather than supporting some more clever form of popups and/or inline nesting was a presentation limitation that would not happen today. (More significantly, the log events would not be paged based on time slice with wmsy, although that limitation is not as obvious from the way I’ve presented the screenshots.)

If anyone in the Thunderbird world is interested in giving logsploder a spin for themselves, the hg repo is here. I also have various patches that I can clean up/make available in my patch queue to support logging xpcshell output to disk (rather than just the network), hooking up the logHelper mechanism so it reports data to mozmill over jsbridge, and (likely heavily bit-rotted) mozmill patches to report those JSON blobs to a CouchDB server (along with screenshots taken when failures occur). The latter stuff never hit the repo because of the previously mentioned lack of a CouchDB instance to actually push the results to. Much of the logHelper/CouchDB work will likely find new life as we move forward with a wmsy-fied Thunderbird Air experiment.

Many thanks to sid0 who has continued to improve logHelper, if sometimes only to stop it from throwing exceptions on newly logged things that its heuristics fatally did not understand 🙂