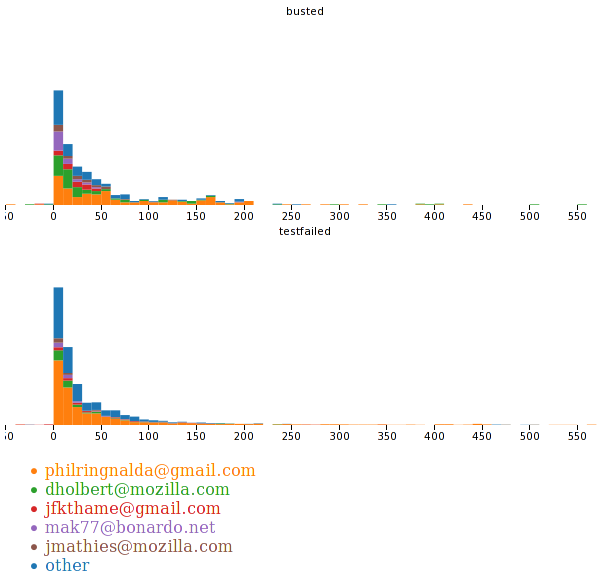

mstange‘s Tinderboxpushlog is awesome. You know it, I know it, the many people whose sanity has been saved by it know it. It is a fantastic improvement on checking the tinderbox; it lets you know the current state of the tree, the recent history of the tree, and how these things are correlated with recent commits. What it is not good at (nor intended for) is to be a historical record. Tinderboxpushlog ‘scrapes’ the tinderbox on-demand using tinderbox time windows and does not have the ability to key off anything but the time.

So if one refactored the scraper into a CommonJS module suitable for use with the newly rebooted jetpack, hooked it up to a cron job, and crammed its outputinto a CouchDB database, what would you get?

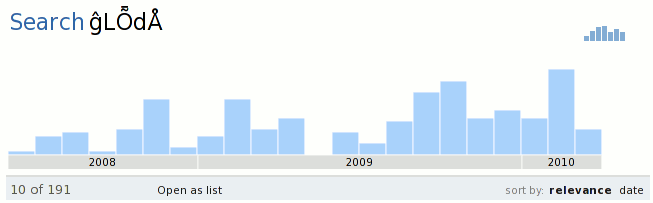

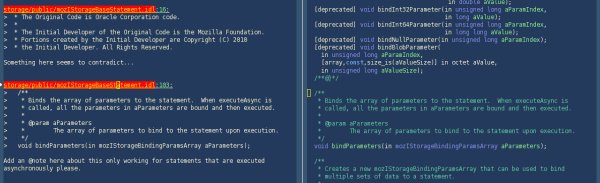

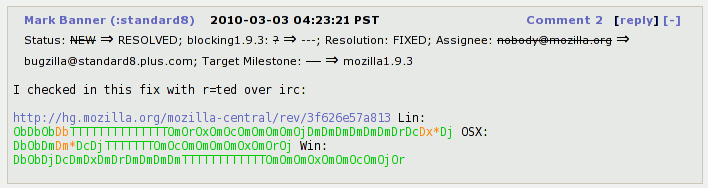

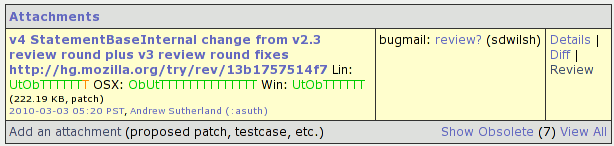

Exactly, something you can hook up to johnath and ehsan‘s magic bugzilla jetpack (last mentioned on the blog here). You can install it from here. (johnath/ehsan, please feel free to pull the changes into the upstream repo; I’m still wary of randomly pushing things into other people’s user repos…). I know the presentation is ugly, not the least because the text labels are inconsistent with tinderboxpushlog; feel free to push into my user repo with improvements. Oh, and for this to work you need to put an honest-to-goodness URL in your bugzilla comment or attachment description; there is no all-powerful regex if you type things out by hand.

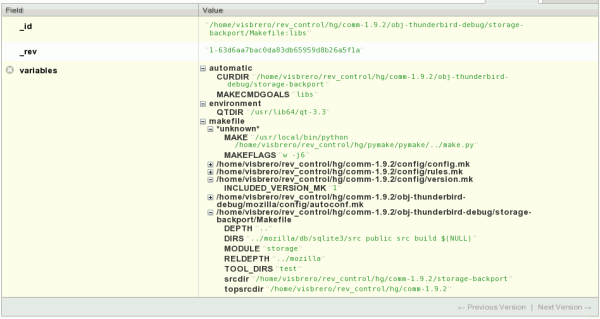

You can find the thing that pushes thing into the couch using refactored tinderboxpushlog logic here. Some cuddlefish runner tweaks (not all of which are likely advisable) can be found in my user jetpack-sdk repo. (The new jetpack reboot is wicked awesome, by the way.)

Right now the cron job is running against the MozillaTry, Firefox, and Thunderbird3.1 trees on the tinderbox every 5 minutes. While it should be pretty easy for me to keep the cron job and couch server online, I make no guarantees about the schema used in the couch, just that I will keep the jetpack in sync with it. And if the service starts exceeding the resources of my (personal) linode, I may have to tweak polling rates or what not (‘what not’ meaning ‘up to and including turning it off’).

There is other work happening in this area and I am excited about that. For example, I think brasstacks has an encompassing vision that should help provide historical data about which tests failed, etc. With any luck, my efforts here will be mooted by buildbot and magic awesome dust.

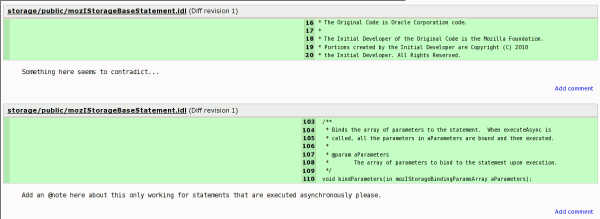

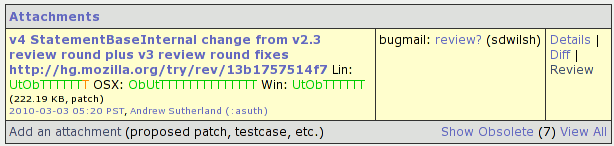

The mention of reviewboard in the title is just that if you are using my review board stuff and put a link to your try server commit in the attachment description, we will use that to pull the official hg changeset as the basis for the diff. The main benefit of this is that if your patch depends on other patches in your queue that are not yet in the trunk, the diff will still work out. Specifically, if your queue had A, B, and C applied (where C is the tip) and you link to C, then we will provide a diff of C relative to B. Please be aware that hg.mozilla.org is rather slow about providing the hg changeset diffs on demand so this will be at least an order of magnitude slower than the fetch of an already-available patch from bugzilla. Repo with changes is here.