For the Thunderbird 3.1 release cycle we are not just fixing UX problems but also resolving various performance issues. Building on my previous work on a visualization of SQLite opcode control flow graphs using graphviz, I give you… the same thing! But updated to more recent versions of SQLite and integrating performance information gathered using systemtap with utrace.

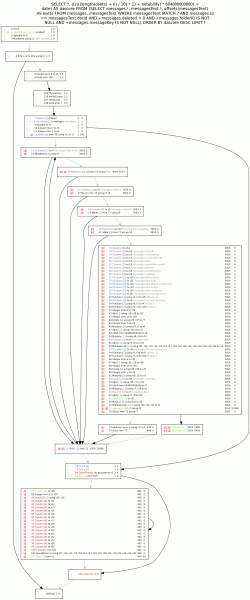

In this case we are using systemtap to extract the number of times each opcode is executed and the number of btree pages that are requested during the course of executing the opcode. (We do not differentiate between cache hits and misses because we are looking at big-O right now.) Because virtual tables (like those used by FTS3) result in nested SQLite queries and we do not care about analyzing the queries used by FTS3, we ignore nested calls and attribute all btree page accesses to the top-level opcode under execution.
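The attribution rule above can be modeled as a small Python function. This is a sketch under stated assumptions, not the systemtap script itself: the event kinds ("enter", "exit", "op", "page") are hypothetical stand-ins for whatever probe points the real script uses. Nesting depth tracks re-entry into the VDBE interpreter, opcodes below the top level are ignored, and page requests at any depth are charged to the top-level opcode currently executing.

```python
from collections import Counter


def attribute_page_requests(events):
    """Attribute btree page requests to the top-level opcode under execution.

    `events` is an iterable of (kind, value) pairs using hypothetical kinds:
      "enter" - the VDBE interpreter is entered (nesting depth + 1)
      "exit"  - the VDBE interpreter returns (nesting depth - 1)
      "op"    - an opcode starts executing; value is its address
      "page"  - a btree page is requested; value is ignored
    """
    invocations = Counter()  # top-level opcode address -> times executed
    pages = Counter()        # top-level opcode address -> btree pages requested
    depth = 0
    current_op = None        # top-level opcode currently executing

    for kind, value in events:
        if kind == "enter":
            depth += 1
        elif kind == "exit":
            depth -= 1
            if depth == 0:
                current_op = None  # the top-level statement step finished
        elif kind == "op":
            # Opcodes from nested (e.g. FTS3 virtual table) queries are ignored.
            if depth == 1:
                current_op = value
                invocations[value] += 1
        elif kind == "page" and current_op is not None:
            # Pages requested by nested queries still count against the
            # top-level opcode that triggered them; hits and misses are
            # not distinguished.
            pages[current_op] += 1

    return invocations, pages
```

For example, a nested query started by opcode 6 that requests two pages adds 2 to opcode 6's page count, while the nested query's own opcodes never appear in the invocation counts.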

Because the utility of a tool depends as much on ease of use as on its feature set, I’ve cleaned things up and made it much easier to get information out of Thunderbird/gloda with this patch, which should land soon and provides the following:

- The gloda datastore will write a JSON file with the EXPLAINed results of all its SQL queries to the path found in the preference mailnews.database.global.datastore.explainToPath. This preference is observed, so setting it at runtime causes the datastore to create the file and begin explaining all subsequently created queries. Clearing or changing the preference closes the current file and, if a new path was given, opens a new one.

- Gloda unit tests will automatically set the preference to the value of the environment variable GLODA_DATASTORE_EXPLAIN_TO_PATH if set.

- A schema dump is no longer required for metadata, because we simply assume that you are using a SQLite DEBUG build, which tells us everything we want to know in the ‘comment’ column.

- grokexplain.py now uses optparse and has more internal documentation and such.
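The preference and environment-variable behavior in the list above can be modeled roughly as follows. This is a hypothetical Python sketch, not the datastore code from the patch: the class name, method names, and the JSON layout ({"sql": ..., "rows": ...}) are all invented for illustration. It shows the lifecycle only: a non-empty path opens a file, queries are explained only while a file is open, and clearing or changing the path closes the current file first.

```python
import json
import os


class ExplainFileManager:
    """Hypothetical model of the explainToPath preference behavior."""

    def __init__(self):
        self.path = None
        self.file = None
        self.entries = []

    def on_pref_changed(self, new_path):
        """Called whenever the (hypothetically observed) preference changes."""
        if new_path == self.path:
            return
        self.close()  # clearing or changing the path closes the current file
        if new_path:
            self.path = new_path
            self.file = open(new_path, "w", encoding="utf-8")

    def explain(self, sql, explain_rows):
        """Record EXPLAIN output for a newly created query, if a file is open."""
        if self.file is not None:
            self.entries.append({"sql": sql, "rows": explain_rows})

    def close(self):
        """Write the collected entries as one JSON document and close the file."""
        if self.file is not None:
            json.dump(self.entries, self.file)
            self.file.close()
        self.path = None
        self.file = None
        self.entries = []


def init_from_environment(manager, environ=None):
    """Mimic the unit-test hook: use GLODA_DATASTORE_EXPLAIN_TO_PATH if set."""
    environ = os.environ if environ is None else environ
    path = environ.get("GLODA_DATASTORE_EXPLAIN_TO_PATH")
    if path:
        manager.on_pref_changed(path)
```

Buffering the entries and writing a single JSON document on close is one design choice among several; an implementation could just as well stream entries as they are created.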

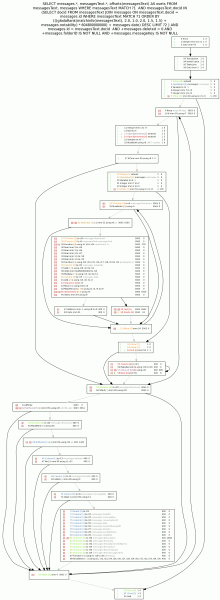

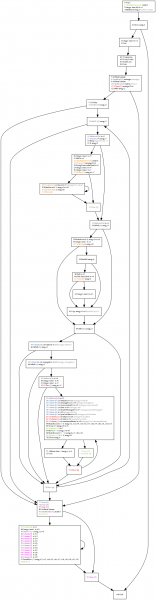

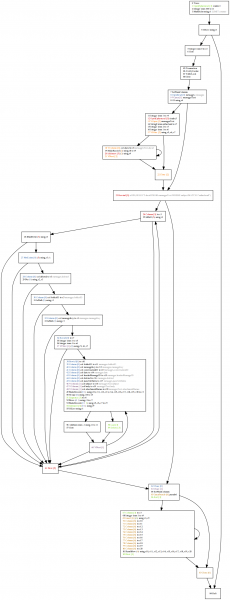

So what do the pretty pictures show?

- Before: A gloda fulltext search query retrieves all of the result data before applying the LIMIT. This copies much more data into temporary result tables than we end up using, which is wasted bookkeeping. Additionally, we incur the row lookup costs for both our messages data storage table and our fulltext messagesText table for all hits, even the ones we will not return. (Note that there was no way to avoid hitting both tables, since we were using offsets(), which reads the fulltext table as part of its operation.)

- After: We perform an initial query phase that minimizes wasted bookkeeping by retrieving and using only the bare minimum required to compute the LIMITed list of document ids. Additionally, by using the FTS3 matchinfo() function instead of offsets(), we avoid performing row lookups on the messagesText table for results that will not be returned to the user. Using matchinfo() requires a custom ranking function, which also lets us be somewhat more clever about boosting results based on fulltext matches.

- The poor man’s bar graphs in the pictures express a hand-rolled logarithmic scale for the number of invocations (left bar) and the number of btree pages accessed (right bar). The actual numbers are also shown, in the same order, on the right side of each line. The goal is not so much to convey good/bad as to draw the eye to hot spots.
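To illustrate what a custom ranking function over matchinfo() involves, here is a Python sketch modeled on the rank-function example in the SQLite FTS3 documentation; it is not the ranking function from the gloda patch. It assumes the default "pcx" matchinfo format: 32-bit unsigned integers in native byte order, holding the phrase count, the column count, then three values per (phrase, column) pair, of which the first two are the hits in this row and the hits across all rows. The SQL function name hypothetical_rank and the per-column weights are illustrative assumptions.

```python
import sqlite3
import struct


def rank_matchinfo(blob, *weights):
    """Score one row from an FTS3/FTS4 matchinfo() blob in default 'pcx' format.

    weights: optional per-column weights; missing ones default to 1.0.
    """
    if blob is None:
        return 0.0
    # matchinfo() returns 32-bit unsigned integers in native byte order.
    ints = struct.unpack(f"={len(blob) // 4}I", blob)
    n_phrase, n_col = ints[0], ints[1]
    score = 0.0
    for phrase in range(n_phrase):
        for col in range(n_col):
            # The 'x' section stores 3 ints per (phrase, column) pair.
            base = 2 + 3 * (phrase * n_col + col)
            hits_this_row, hits_all_rows = ints[base], ints[base + 1]
            if hits_this_row > 0:
                weight = weights[col] if col < len(weights) else 1.0
                score += (hits_this_row / hits_all_rows) * weight
    return score


def register(conn):
    """Expose the ranker to SQL, e.g. ORDER BY hypothetical_rank(matchinfo(t), 2.0)."""
    conn.create_function("hypothetical_rank", -1, rank_matchinfo)
```

Because the score needs only the matchinfo() blob, a query can rank and LIMIT document ids without touching the fulltext content, which is the property the "after" query relies on.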

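A hand-rolled logarithmic bar like the ones in the pictures takes only a few lines. This Python sketch assumes a base-10 scale with one mark per decimal digit; the actual scale and layout used by grokexplain.py may differ, and format_row is a hypothetical helper.

```python
def log_bar(count, mark="#"):
    """Hypothetical base-10 log bar: one mark per decimal digit of count."""
    if count <= 0:
        return ""
    # len(str(n)) == floor(log10(n)) + 1 for n >= 1, without float rounding.
    return mark * len(str(count))


def format_row(label, invocations, pages, width=8):
    """Left bar = invocations, right bar = btree pages, then the raw numbers."""
    return (f"{label:<16}{log_bar(invocations):<{width}} "
            f"{log_bar(pages):<{width}} {invocations} {pages}")
```

Counting digits keeps the bars short even when counts span several orders of magnitude, which is what makes hot spots stand out at a glance.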
Notes for people who want more to read:

- SQLite has built-in infrastructure to track the number of times an opcode is executed as well as the wall-clock time used; you do not have to use systemtap. It is a compile-time option: build with -DVDBE_PROFILE and every statement you execute gets its performance data appended to vdbe_profile.out when you reset the statement. It doesn’t do the btree-pages-consulted trick, but it could with some code changes.

- We use systemtap primarily for control over what gets reported, the ability to integrate the analysis across other abstraction layers, and various build concerns. The JSON output is not a driving concern, just something nice we can do for ourselves since we are formatting the output anyway.

- The tool has really crossed over into the super-win-payoff category with this fix investigation. (Having said that, I probably could have skipped some of the data-flow stuff last time around. But these projects are both for personal interest and amusement as well as practical benefit, so what are you gonna do? Also, that effort could pay off a bit more by propagating comments along register uses so that the LIMIT counter register r8 and the compute-once r7 in the after diagram would not require human thought.)

References:

- The grokexplain repo. Used like so: python grokexplain.py --vdbe-stats=/tmp/glodaNewSearchPerf.json /tmp/glodaNewSearchExplained.json -o /tmp/glodasearch-newcheck

- The systemtap script in its repo. Used like so: sudo stap -DMAXSTRINGLEN=1024 sqlite-perf.stp /path/to/thunderbird-objdir/mozilla/dist/lib/libsqlite3.so > /tmp/glodaNewSearchPerf.json

- The bug with the gloda explain logic patch and the improved SQL query logic. I also used the tooling on another (now-fixed) bug, but that problem turned out to be less interesting.
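For anyone building a similar front end, the grokexplain command line from the references can be declared with optparse along these lines. This is a sketch that assumes only what that command shows (a --vdbe-stats option, an -o output option, and one positional EXPLAINed JSON file); the real script's option names, defaults, and help text may differ.

```python
from optparse import OptionParser


def build_parser():
    """Hypothetical optparse setup mirroring the command line in the references."""
    parser = OptionParser(usage="usage: %prog [options] EXPLAINED_JSON")
    parser.add_option("--vdbe-stats", dest="vdbe_stats", metavar="FILE",
                      help="JSON performance data produced by the systemtap script")
    parser.add_option("-o", dest="output", metavar="PATH",
                      help="output path for the generated graphs")
    return parser


def parse(argv):
    """Return (options, explained_json_path); exits with usage on bad input."""
    parser = build_parser()
    options, args = parser.parse_args(argv)
    if len(args) != 1:
        parser.error("expected exactly one EXPLAINed JSON file")
    return options, args[0]
```

optparse accepts options interspersed with positional arguments by default, so "-o" may follow the input file as it does in the example command.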