Asynchronous JS can be unwieldy and confusing. Callbacks in particular get out of hand once you introduce error handling and start chaining asynchronous operations. So people frequently turn to something like the deferreds from Python’s Twisted, which provide explicit error handling and let a ‘callback’ return yet another asynchronous operation.

In CommonJS-land, there are proposals for deferred-ish promises. In a dangerously concise nutshell, these are:

- Promises/A: promises have a then(callback, errback) method.

- Promises/B: the promises module has a when(value, callback, errback) helper function.
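
To make the difference concrete, here is a minimal sketch of a `when`-style helper. It is a simplified stand-in, not the Promises/B reference code: detecting a promise by looking for a `then` method is an assumption made for illustration, it calls back synchronously for plain values, and `isThenable` and `describeLength` are hypothetical names.

```javascript
// Promises/A style: the caller must already know that `p` is a promise.
//   p.then(callback, errback);
//
// Promises/B style: a helper decides what `value` is on the caller's behalf.

// Assumption for this sketch only: anything with a then() method is a promise.
function isThenable(value) {
  return value !== null &&
    (typeof value === "object" || typeof value === "function") &&
    typeof value.then === "function";
}

function when(value, callback, errback) {
  if (isThenable(value)) {
    // Promise-like: let it decide when to run the handlers.
    return value.then(callback, errback);
  }
  // Plain value: nothing to wait for.
  return callback(value);
}

// The call site reads the same whether it is handed text or a promise for text.
function describeLength(textOrPromise) {
  return when(textOrPromise, (text) => text.length + " chars");
}
```

If a function that used to return a plain string later starts returning a promise, callers written against `when` do not need to change.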

I am in the Promises/B camp because the when construct lets you not care whether value is actually a promise or a plain value, both now and if that changes in the future. The bad news about Promises/B is that:

- It is currently not duck typable (but there is a mailing list proposal to support unification that I am all for) and so really only works if you have exactly one promises module in your application.

- The implementation will make your brain implode-then-explode because it is architected for safety and to support transparent remoting.

To elaborate on the (elegant) complexity, it uses a message-passing idiom where you send your “when” request to the promise, which is then responsible for actually executing your callback or errback. So if value is actually a value, it just invokes your callback on the value. If value is a promise, it queues your callback until the promise is resolved. If value is a rejection, it invokes your rejection handler. When a callback returns a new promise, any “when”s that were targeted at the associated promise end up retargeted to the newly returned promise. The bad debugging news is that almost every message-transmission step is forward()ed into a subsequent turn of the event loop, which results in debuggers losing a lot of context. (Although anything that maintains linkages between the code that created a timer and the fired timer event, or other causal chaining, at least has a fighting chance.)
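
A stripped-down sketch of that idiom may help. Every name here (`defer`, `receiveWhen`, `runAllTurns`, and so on) is hypothetical and this is not the Q library's code; in particular, a manual queue stands in for the event loop so the turn-by-turn behavior is deterministic, and exception handling is left out.

```javascript
// A manual queue stands in for "a subsequent turn of the event loop".
const turns = [];
const enqueue = (fn) => turns.push(fn);
function runAllTurns() {
  while (turns.length) turns.shift()();
}

const isPromise = (v) =>
  v !== null && typeof v === "object" && typeof v.receiveWhen === "function";
const isRejection = (v) =>
  v !== null && typeof v === "object" && v.isRejection === true;
const reject = (reason) => ({ isRejection: true, reason });

// Deliver a "when" request to whatever `value` turns out to be, one turn later.
function forward(value, callback, errback) {
  enqueue(() => {
    if (isPromise(value)) value.receiveWhen(callback, errback);
    else if (isRejection(value)) errback(value.reason);
    else callback(value);
  });
}

function defer() {
  let pending = []; // queued [callback, errback] pairs; null once resolved
  let target;       // the value, rejection, or promise we were resolved with
  const promise = {
    receiveWhen(callback, errback) {
      if (pending) pending.push([callback, errback]);
      else forward(target, callback, errback);
    },
  };
  function resolve(value) {
    if (!pending) return; // ignore repeated resolution
    const queued = pending;
    pending = null;
    target = value;
    // Retarget everything that was waiting on us to the new value or promise.
    for (const [callback, errback] of queued) forward(value, callback, errback);
  }
  return { promise, resolve };
}

function when(value, callback, errback) {
  const result = defer();
  forward(
    value,
    (v) => result.resolve(callback ? callback(v) : v),
    (reason) => result.resolve(errback ? errback(reason) : reject(reason))
  );
  return result.promise;
}
```

Note that by the time a callback runs, the stack contains only the queue runner and the small closure that `forward` enqueued, which is the loss of context described above.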

In short, promises make things more manageable, but they don’t really make things less confusing, at least not without a little help. Some time ago I created a modified version of Kris Kowal’s Q library implementation that:

- Allows you to describe what a promise actually represents using human words.

- Tracks relationships between promises (or allows you to describe them) so that you can know all of the promises that a given promise depends/depended on.

- Completely abandons the security/safety stuff that kept promises isolated.
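
As a rough illustration of the first two points, the bookkeeping could look something like the sketch below. The record shape and the names `describePromise` and `allDependencies` are hypothetical, chosen for this example; they are not the actual pwomise.js API.

```javascript
// Hypothetical bookkeeping record, one per promise.
let nextId = 1;
function describePromise(what, dependsOn = []) {
  return {
    id: nextId++,
    what,               // human-readable description of what this represents
    dependsOn,          // records for the promises this one waits on
    state: "pending",   // later "resolved" or "rejected"
    createdAt: Date.now(),
    resolvedAt: null,
  };
}

// Collect every record that `record` depends on, directly or transitively.
function allDependencies(record, seen = new Set()) {
  for (const dep of record.dependsOn) {
    if (!seen.has(dep)) {
      seen.add(dep);
      allDependencies(dep, seen);
    }
  }
  return [...seen];
}

// Usage: a document load that waits on a parse, which waits on a fetch.
const fetchStep = describePromise("fetch source file");
const parseStep = describePromise("parse document", [fetchStep]);
const renderStep = describePromise("render widgets");
const loadStep = describePromise("load document", [parseStep, renderStep]);
```

Here `allDependencies(loadStep)` yields the parse, fetch, and render records, which is the kind of data a visualization can then walk.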

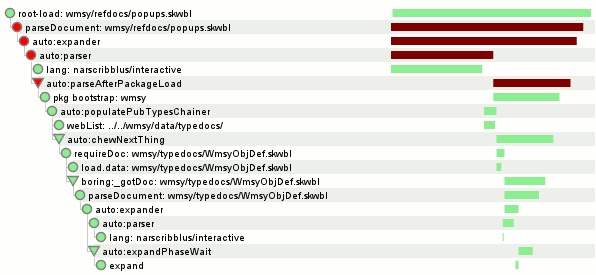

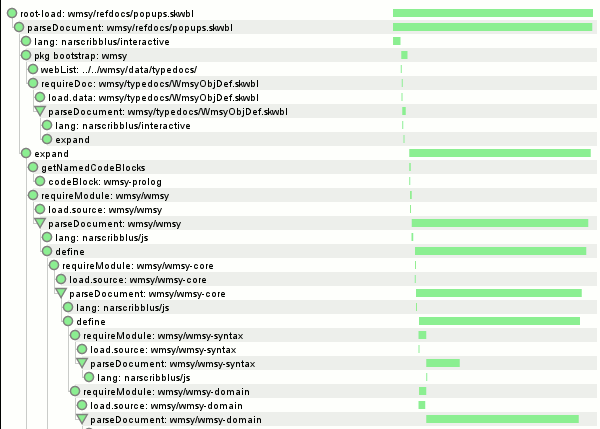

The end goal was to support debugging/understanding of code that uses promises by converting that data into something usable like a visualization. I’ve done this now, applying it to jstut’s (soon-to-be-formerly narscribblus’) load process to help understand what work is actually being done. If you are somehow using jstut trunk, you can invoke document.jstutVisualizeDocLoad(/* show boring? */ false) from your JS console and see such a graph in all its majesty for your currently loaded document.

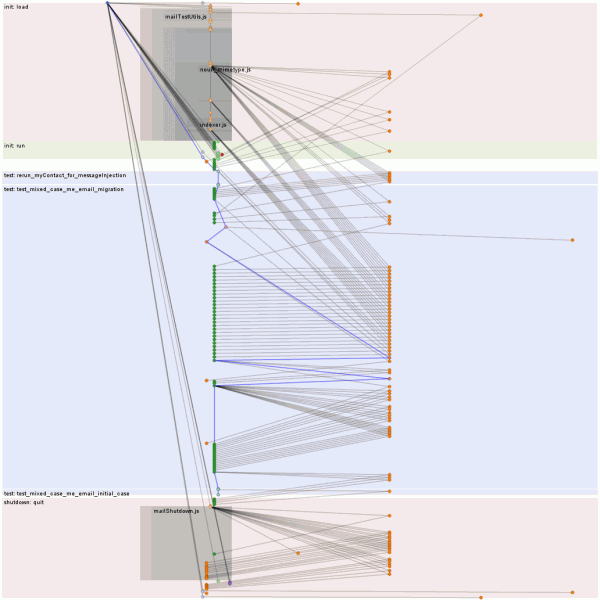

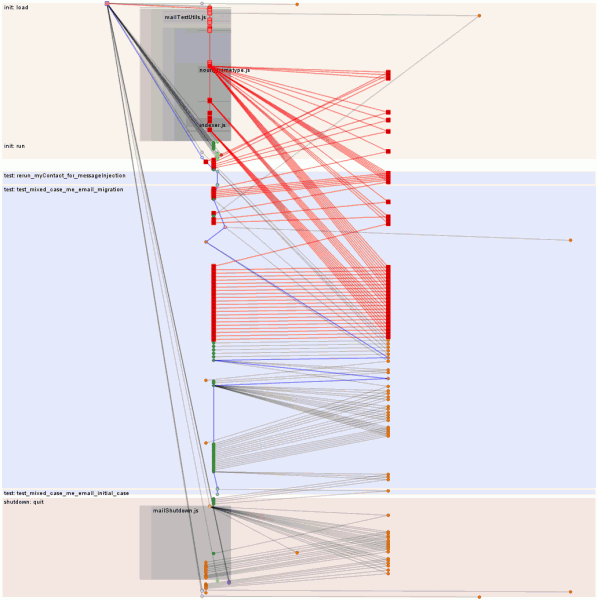

The first screenshot (show boring = true) is of a case where a parse failure of the root document occurred and we display a friendly parse error screen. The second screenshot (show boring = false) is the top bit of the successful presentation of the same document where I have not arbitrarily deleted a syntactically important line.

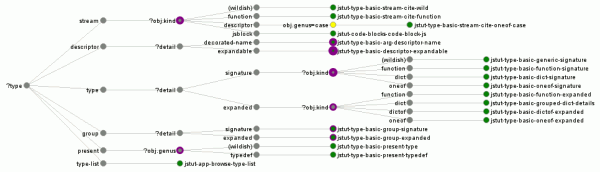

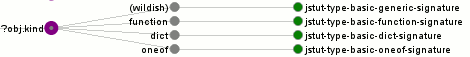

A basic description of the visualization:

- It’s a hierarchical Protovis indented tree. The children of a node are the promises it depended on. A promise that depended in parallel(-ish) on multiple promises will have multiple children. The special case is that if we had a “when” W depending on promise X, and X was resolved with promise Y, then W gets retargeted to Y. This is represented in the visualization as W having children X and Y, but with Y having a triangle icon instead of a circle, to distinguish this from W having depended on X and Y in parallel from the get-go.

- The poor man’s timeline on the right-hand side shows the time-span between when the promise was created and when it was resolved. It does not show how long the callback function took to run, although that will fall strictly within the shown time-span. Time-bar widths are lower bounded at 1 pixel, so the width of a 1-pixel-wide bar tells you nothing about duration; only its position is meaningful.

- Nodes are green if they were resolved, yellow if they were never resolved, red if they were rejected. Nodes are gray if the node and its dependencies were already shown elsewhere in the graph; dependencies are not shown in such a case. This reduces redundancy in the visualization while still expressing actual dependencies.

- Timelines are green if the promise was resolved, maroon if it was rejected or never resolved. If the promise was never resolved, its timeline extends all the way to the right edge.

- Boring nodes are elided when so configured, with their interesting children spliced in to take their place. A node is boring if its “what” description starts with “auto:” or “boring:”. The when() logic automatically annotates an “auto:functionName” if the callback function has a name.
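
The rules in this list are simple enough to sketch in a few functions. This is an illustration of the rules as described, not the code in pwomise-vis.js; the node shape (`what`, `state`, `children`, `createdAt`, `resolvedAt`) and all function names are assumptions, and the maroon rule reflects my reading that rejected and never-resolved promises share that color.

```javascript
// A node is boring if its description starts with "auto:" or "boring:".
const isBoring = (node) => /^(auto:|boring:)/.test(node.what);

// The automatic annotation for a named callback; undefined when it has no name.
function autoWhat(callback) {
  return callback.name ? "auto:" + callback.name : undefined;
}

// Drop boring nodes, splicing their interesting descendants in where they were.
function visibleChildren(children) {
  return children.flatMap((child) => {
    const kids = visibleChildren(child.children);
    return isBoring(child) ? kids : [{ ...child, children: kids }];
  });
}

// Node color: gray when already drawn elsewhere, otherwise by state.
function nodeColor(node, alreadyShown) {
  if (alreadyShown) return "gray";
  if (node.state === "resolved") return "green";
  if (node.state === "rejected") return "red";
  return "yellow"; // never resolved
}

// Timeline color: green only for resolved promises.
const timelineColor = (node) => (node.state === "resolved" ? "green" : "maroon");

// Horizontal extent of a time bar within a chart `widthPx` wide that covers
// the interval [startMs, endMs]. Unresolved promises run to the right edge.
function timeBar(node, startMs, endMs, widthPx) {
  const scale = widthPx / (endMs - startMs);
  const left = (node.createdAt - startMs) * scale;
  const right =
    node.resolvedAt == null ? widthPx : (node.resolvedAt - startMs) * scale;
  return { left, width: Math.max(1, right - left) }; // never narrower than 1px
}
```

The `Math.max(1, ...)` clamp is why a 1-pixel bar says nothing about duration: anything shorter than one pixel's worth of time is drawn at the same width.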

You can find pwomise.js and pwomise-vis.js in the narscribblus/jstut repo. It’s called pwomise not to be adorable but rather to make it clear that it’s not promise.js. I have added various comments to pwomise.js that may aid in understanding. Sometime soon I will update my demo setup on clicky.visophyte.org so that all can partake.