Recap from step 1: Sometimes unit test failures on the mozilla tinderboxen are (allegedly, per me) due to insufficiently constrained asynchronous processes. Sometimes the tests time out because of the asynchronous ordering thing, sometimes it’s just because they’re slow. Systemtap is awesome. Using systemtap we can get exciting debug output in JSON form which we can use to fight the aforementioned things.

Advances in bullet point technology have given us the following new features:

- Integration of latencytap by William Cohen. Latencytap is a systemtap script that figures out why your thread/process started blocking. It uses kernel probes to notice when the task gets activated/deactivated, which tells us how long it was asleep. It performs a kernel backtrace and uses a reasonably extensive built-in knowledge base to figure out the best explanation for why it decided to block. This gets us not only fsync() but memory allocation internals and other neat stuff too.

- We ignore everything shorter than a microsecond, because that's what latencytap already did by virtue of dealing in microseconds, and it seems like a good idea. (We use nanoseconds, though, so we filter out slightly more: our cutoff is an explicit threshold rather than a side effect of quantization.)

- We get JS backtraces where available for anything longer than 0.1 ms.

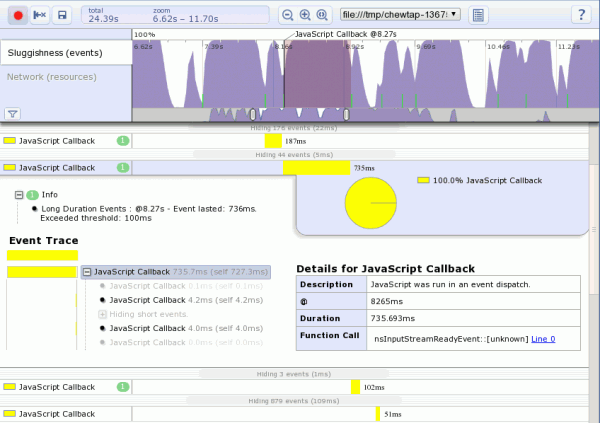
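To make the two thresholds above concrete, here is a minimal Python sketch of the filtering logic. It is not the actual systemtap script; `classify_sleep`, `LatencyEvent`, and the constant names are hypothetical, and the timestamps are assumed to be nanosecond values taken at deactivation and reactivation.

```python
from dataclasses import dataclass
from typing import Optional

NS_PER_US = 1_000
MIN_REPORT_NS = 1 * NS_PER_US       # drop sleeps shorter than 1 microsecond
JS_BACKTRACE_NS = 100 * NS_PER_US   # 0.1 ms: above this, ask for a JS backtrace


@dataclass
class LatencyEvent:
    """Hypothetical record for one reported sleep."""
    reason: str
    duration_ns: int
    wants_js_backtrace: bool


def classify_sleep(deactivated_ns: int, activated_ns: int,
                   reason: str) -> Optional[LatencyEvent]:
    """Turn a deactivate/activate timestamp pair into an event, or None.

    The sleep duration is the gap between the task being descheduled and
    being made runnable again.
    """
    duration_ns = activated_ns - deactivated_ns
    if duration_ns < MIN_REPORT_NS:
        return None  # too short to be interesting
    return LatencyEvent(reason, duration_ns, duration_ns > JS_BACKTRACE_NS)
```

With microsecond timestamps, a 999 ns sleep that straddles a microsecond boundary would measure as 1 µs and be kept; with nanosecond timestamps and an explicit cutoff it is dropped, which is the "slightly more" mentioned above.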

- The visualization is now based off of wall time by default.

- Latency and GC activity are now shown on the visualization in the UI.

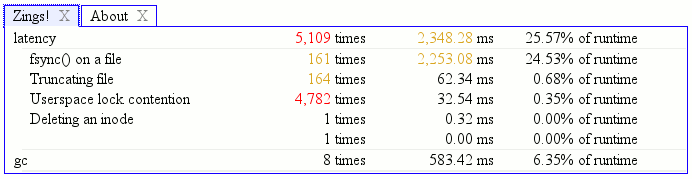

- Automated summarization of latency including aggregation of JS call stacks.

- The new UI bits drove and benefit from various wmsy improvements and cleanup. Many thanks to my co-worker James Burke for helping me with a number of design decisions there.

- The systemtap probe compilation non-determinism bug mentioned last time is not gone yet, but thanks to the friendly and responsive systemtap developers, it will be gone soon!

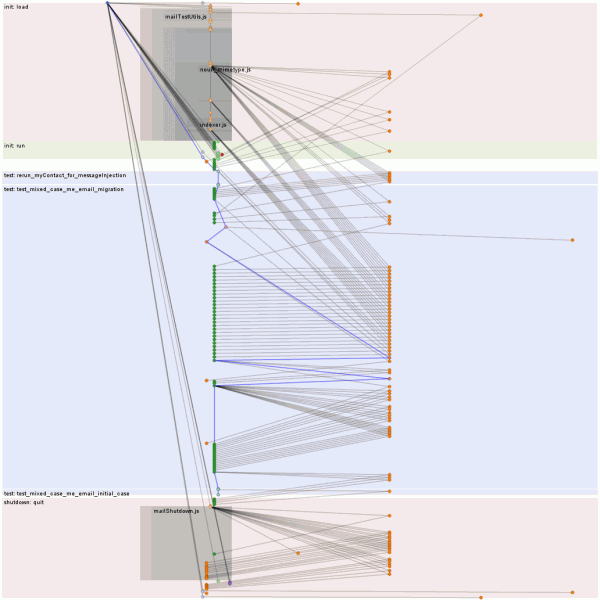
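The latency summarization mentioned in the list above amounts to grouping events by their explanation and JS call stack and adding up the time. A rough Python sketch, assuming each event from the JSON output is a dict with `reason`, `duration_ns`, and an optional `js_stack` list (these field names are made up for illustration and are not the real output format):

```python
from collections import defaultdict


def summarize_latency(events):
    """Aggregate latency events by (reason, JS call stack).

    Returns rows of (reason, stack, count, total_ns), worst offender first.
    """
    totals = defaultdict(lambda: {"count": 0, "total_ns": 0})
    for ev in events:
        # Events without a JS backtrace all share the empty stack.
        key = (ev["reason"], tuple(ev.get("js_stack") or ()))
        entry = totals[key]
        entry["count"] += 1
        entry["total_ns"] += ev["duration_ns"]
    rows = [(reason, stack, e["count"], e["total_ns"])
            for (reason, stack), e in totals.items()]
    # Sort by total time spent blocked, largest first.
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows
```

Keying on the whole stack rather than just the top frame keeps distinct callers of the same slow operation apart, which is what you want when hunting for who is responsible.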

Using these new and improved bullet points we were able to look at one of the tests that seemed to be intermittently timing out (the bug) for legitimate reasons of slowness. And recently, not just that one test, but many of its friends using the same test infrastructure (asyncTestUtils).

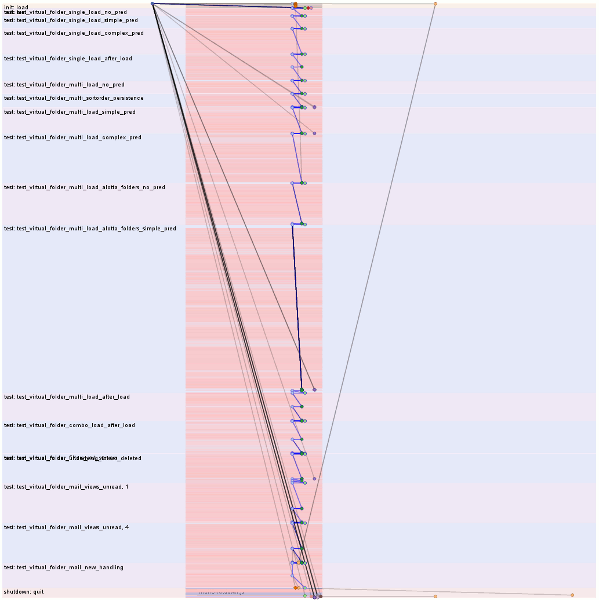

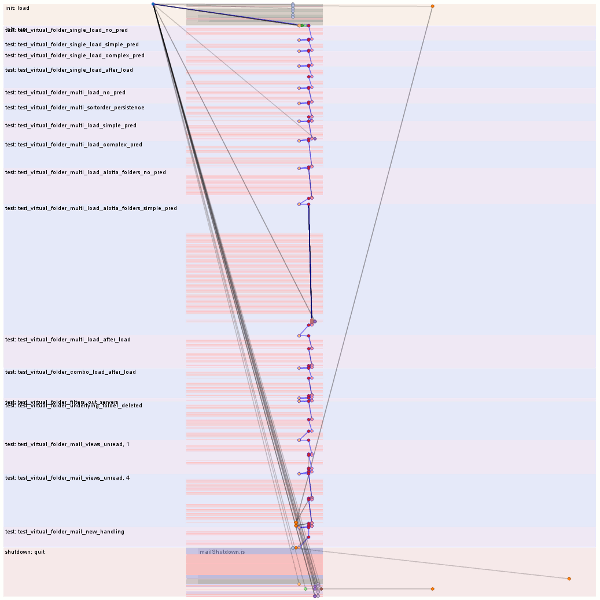

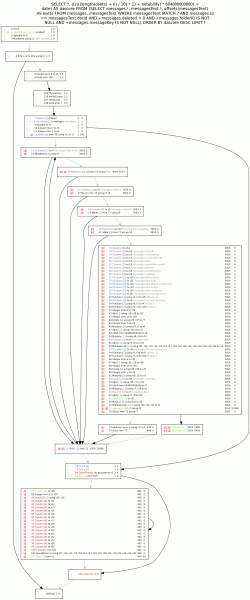

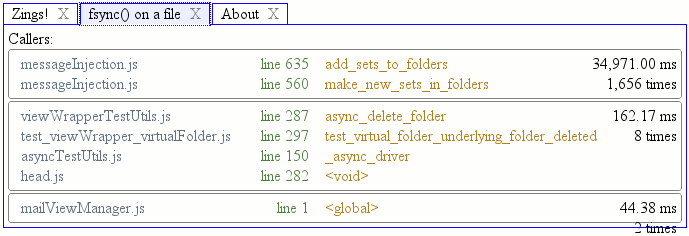

So if you look at the top visualization, you will see lots of reds and pinks; it’s like a trip to Arizona but without all of the tour buses. Those are all our fsyncs. How many fsyncs? This many fsyncs:

Why so many fsyncs?

Oh dear, someone must have snuck into messageInjection.js when I was not looking! (Note: comment made for comedic purposes; all the blame is mine, although I have several high quality excuses up my sleeve if required.)

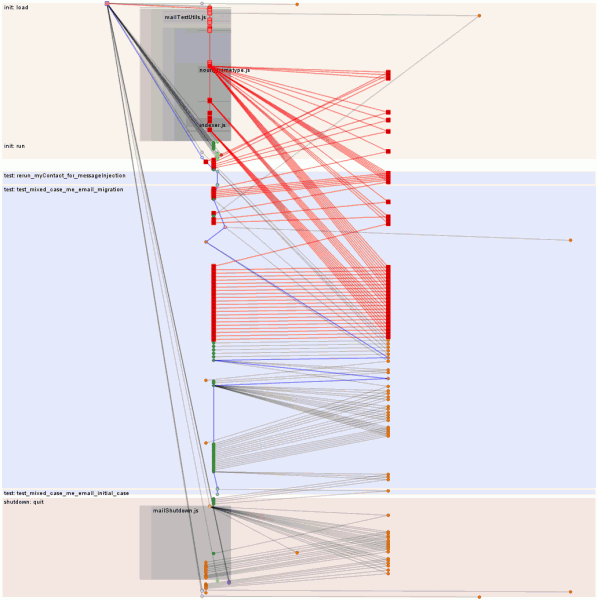

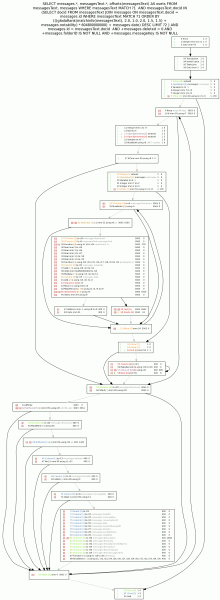

What would happen if we change the underlying C++ class to support batching semantics and the injection logic to use it?

Nice.
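For the curious, the batching idea looks roughly like the following Python sketch. This is not the actual C++ class or the messageInjection.js code; `MessageStore`, `begin_batch`, `end_batch`, and `add_message` are hypothetical names, and the store is just an append-only file. The point is only that without a batch every message pays for its own fsync, while inside a batch the sync is deferred to the end.

```python
import os


class MessageStore:
    """Hypothetical append-only store that fsyncs once per message unless batched."""

    def __init__(self, path):
        self._fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o600)
        self._batch_depth = 0
        self._dirty = False
        self.fsync_count = 0  # exposed so the cost is easy to observe

    def begin_batch(self):
        self._batch_depth += 1

    def end_batch(self):
        self._batch_depth -= 1
        # Only the outermost end_batch flushes, and only if something was written.
        if self._batch_depth == 0 and self._dirty:
            self._sync()

    def add_message(self, data: bytes):
        os.write(self._fd, data)
        self._dirty = True
        if self._batch_depth == 0:
            self._sync()  # unbatched: one fsync per message

    def _sync(self):
        os.fsync(self._fd)
        self.fsync_count += 1
        self._dirty = False

    def close(self):
        os.close(self._fd)
```

Injecting N messages inside a `begin_batch()`/`end_batch()` pair costs one fsync instead of N, at the price of a larger window in which a crash loses the unsynced messages. For test infrastructure that trade-off seems fine.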

NB: No, I don’t know exactly what the lock contention is. The label might be misleading, since it is based on sys_futex/do_futex being on the stack rather than the syscall. The contention entries only show up on one thread, and the latencytap kernel probes fire for everything and have to self-filter using globals, so I would not be surprised if it turned out that the systemtap probes themselves use futexes and that’s what’s going on. It’s not trivial to find out: the latencytap probes can’t really get a good native userspace backtrace (the bug), and when I dabbled in that area I managed to hard lock my system, and I really dislike rebooting. So a mystery they will stay, unless someone tells me, or I read more of the systemtap source, or implement hover-brushing in the visualization, or otherwise figure things out.

There is probably more to come, including me running the probes against a bunch of mozilla-central/comm-central tests and putting the results up in interactive web-app form (with the distilled JSON available). It sounds like I might get access to a MoCo VM to facilitate that, which would be nice.