bsmedberg’s dxrpy generates documentation using dehydra (bsmedberg’s blog posts on this: 1 2). I have added support for references/referencedBy extraction, otherwise wailed on it a little bit, and run it against JUST comm-central’s mailnews/ directory. The collection process is still strictly serial and there’s a lot of stuff in mozilla-central/comm-central, which is why I only processed that subset. You can find the outputs here. My repository with my changes is here. There are all kinds of issues and limitations, of course.

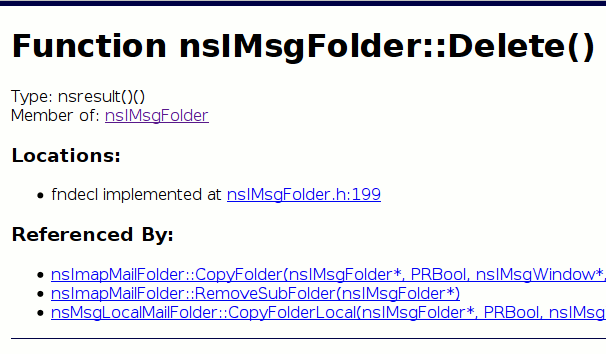

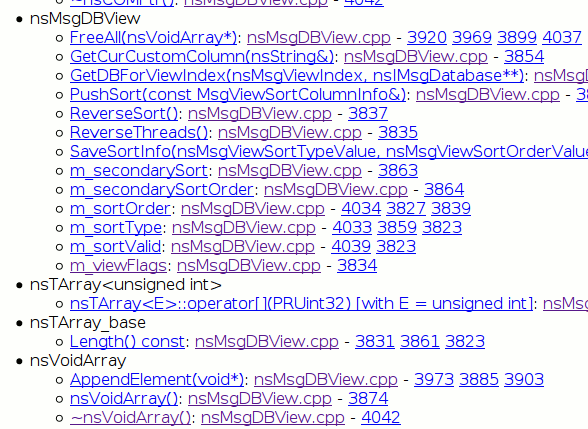

The first picture is of nsIMsgFolder::Delete(); the major advantage over mxr is that we don’t get ambiguity from completely unrelated Delete methods. Note that in the mxr link I am cheating by not filtering to mailnews/, but the point is that with dxrpy you don’t need to do that. The second picture shows a slice of the ‘references’ information for nsMsgDBView::Sort. Another thing you could see is who is using nsVoidArray (in mailnews/!) by looking at what references its destructor.
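To make the references/referencedBy idea concrete: once you know, for each function, which symbols it references, referencedBy is just the inverse map, and the nsVoidArray question becomes a lookup on its destructor. This Python sketch uses made-up function names and a made-up dict layout; it is not dxrpy’s actual data format, only an illustration of the inversion.

```python
from collections import defaultdict

# Made-up data: each function maps to the symbols it references. This
# layout is an assumption for illustration, not dxrpy's actual format.
references = {
    "ExampleView::Sort": ["ExampleView::SortThreads", "nsVoidArray::~nsVoidArray"],
    "ExampleView::SortThreads": ["nsVoidArray::~nsVoidArray"],
    "ExampleFolder::Delete": ["ExampleFolder::DeleteSubFolders"],
}


def invert_references(refs):
    """Build referencedBy: symbol -> sorted list of functions referencing it."""
    referenced_by = defaultdict(set)
    for caller, callees in refs.items():
        for callee in callees:
            referenced_by[callee].add(caller)
    return {symbol: sorted(callers) for symbol, callers in referenced_by.items()}


referenced_by = invert_references(references)
# Who uses nsVoidArray? Whoever references its destructor.
print(referenced_by["nsVoidArray::~nsVoidArray"])
# ['ExampleView::Sort', 'ExampleView::SortThreads']
```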

My interest in this is a continuation of my efforts on pecobro to provide an interface to expose performance information from various means of instrumentation. That such a thing requires a useful interface substrate is just a nice surprise. The dehydra C++ stuff complements the references/referenced by work I did for javascript in pecobro. Eventually I would hope to use the jshydra infrastructure to accomplish the js stuff since the parser pecobro uses is an unholy/brittle thing, and a usable jshydra would have all kinds of other exciting uses.

Things I would be very happy for other people to magically do so I don’t need to do them:

- Magic up bespin so that it can pull files out of hg.mozilla.org. One of the significant reasons I did not do more work on pecobro is that the approach of syntax highlighting in the DOM explodes quite badly when confronted with source files you might find in mozilla-central/comm-central.

- Make dxrpy generate json files representing the information it knows about each source file (positions => semantic info) as well as each function (positions of references within the function => semantic info on those references).

- Make bespin retrieve those json files when the file is loaded so that its naive syntax highlighting can be augmented to correlate with that semantic info. One could attempt to be clever and use simple regexes or something to allow this information to be maintained while you edit the file, but I only need bespin to be read-only.

- Make it easy for bespin to use that info to follow references and return without sucking.

- Make something that extracts stack-traces from socorro and clusters this information by class, sticking it in static json files too, why not.

- Make bespin use its semantic info, plus plain line-number info, to grab the stack-trace crash info so that it can highlight code in bespin that is suspected to be crashy. (You would probably want to get clever about determining which frames in the trace are potentially innocent and which should be considered actually crashy. Knowing when you’re calling out of your module is probably a simple and useful heuristic.)

- Make something that chews on the crash stacks together with the dehydra/dxrpy data to visualize, module-wise, where the crashy stuff is happening.

- Make bespin retrieve the file/class-oriented json files that my performance tooling generates, letting me display cool sparkline/sparkbar things in the source.

- Cleanup/infrastructurization of dxrpy’s generation/display mechanisms. My greatest need of dxrpy is actually a coherent framework that can easily integrate with bespin. The tricky part for my performance work is the javascript side of the equation, and the pecobro tooling actually does pretty well there. It just wants to have a migration path and an interface that doesn’t cause firefox to lock up every time I change syntax-highlighted documents…
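For the json-files-for-bespin idea, here is a rough Python sketch of what a per-file sidecar could look like. The field names (`line`, `col`, `len`, `kind`, `symbol`), the grouping by line, and the file path are all assumptions made up for illustration; dxrpy does not emit anything like this today.

```python
import json


def occurrence(line, col, length, kind, symbol):
    """One semantic span in a source file (hypothetical field names)."""
    return {"line": line, "col": col, "len": length, "kind": kind, "symbol": symbol}


def build_file_index(path, occurrences):
    """Group occurrences by line so a viewer can fetch one line's spans cheaply.

    JSON object keys must be strings, hence str(line).
    """
    by_line = {}
    for occ in sorted(occurrences, key=lambda o: (o["line"], o["col"])):
        span = {k: occ[k] for k in ("col", "len", "kind", "symbol")}
        by_line.setdefault(str(occ["line"]), []).append(span)
    return {"file": path, "lines": by_line}


# Made-up occurrences for a hypothetical file.
occs = [
    occurrence(120, 10, 6, "call", "nsIMsgFolder::Delete"),
    occurrence(118, 4, 11, "type", "nsVoidArray"),
    occurrence(120, 2, 6, "variable", "folder"),
]
index = build_file_index("mailnews/example/example.cpp", occs)
print(json.dumps(index, indent=2, sort_keys=True))
```

Keying by line keeps the lookup trivial for a read-only viewer that only renders what is on screen; supporting edits would mean shifting these positions as text changes, which the read-only case gets to ignore.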
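A sketch of the crash-clustering items using the calling-out-of-your-module heuristic: walk each stack from the innermost frame outward and blame the first frame that lives in the module you care about, so a crash deep in code that mailnews called into is charged to the mailnews caller. The path-prefix module map, the stack format (innermost-first `(function, path)` tuples), and every frame name below are hypothetical; real stacks would come from socorro and real module boundaries from the dxrpy data.

```python
from collections import Counter

# Hypothetical path-prefix -> module map; real module boundaries would
# come from the source tree layout or the dehydra/dxrpy data.
MODULES = {"mailnews/": "mailnews", "xpcom/": "xpcom", "js/src/": "js"}


def module_of(path):
    for prefix, module in MODULES.items():
        if path.startswith(prefix):
            return module
    return "other"


def blame_frame(stack, my_module="mailnews"):
    """Return the innermost frame that belongs to my_module, or None.

    `stack` is a list of (function, path) tuples, innermost first. If the
    crash happened in another module that my_module called into, the
    caller in my_module gets the blame instead of the possibly innocent callee.
    """
    for function, path in stack:
        if module_of(path) == my_module:
            return function, path
    return None


def class_of(function):
    # "Foo::Bar::Baz" -> "Foo::Bar"; free functions map to themselves.
    return function.rsplit("::", 1)[0] if "::" in function else function


def cluster_by_class(stacks, my_module="mailnews"):
    counts = Counter()
    for stack in stacks:
        blamed = blame_frame(stack, my_module)
        if blamed is not None:
            counts[class_of(blamed[0])] += 1
    return counts


def crash_sites_by_module(stacks):
    # Module-wise view: which module the innermost (crashing) frame is in.
    return Counter(module_of(stack[0][1]) for stack in stacks if stack)


# Made-up stacks, innermost frame first.
stacks = [
    [("ExampleArray::ElementAt", "xpcom/glue/ExampleArray.cpp"),
     ("ExampleView::Sort", "mailnews/base/src/ExampleView.cpp")],
    [("ExampleView::Sort", "mailnews/base/src/ExampleView.cpp")],
    [("ExampleEngine::Run", "js/src/ExampleEngine.cpp")],
]
print(cluster_by_class(stacks))  # Counter({'ExampleView': 2})
print(crash_sites_by_module(stacks))
```

Both counters are plain dicts underneath, so dumping them into static json files is a one-liner.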

And if anyone already has a tool that is basically a statistical profiler that can do stack-traces that fully understand the interleaved nature of the C++ and spidermonkey JS stacks, that would be great. (Bonus points for working with the JIT enabled!) My dream would be a timer-driven VProbe based solution; then I could run things against a replay and avoid distortion of the timescale while also allowing for a realistic user experience in generating the trace. I would like to avoid having to do trace reconstruction as I have done most recently with dtrace and chronicle-recorder. I would not be opposed to a PythonGDB solution, especially if VMware 6.5 still provides a gdb remote thinger (did the eclipse UI make that disappear?), although that would probably still be more crossings than I would like and perhaps slower (if less distorting) than an in-process or ptrace based solution.
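To illustrate what understanding the interleaving means: a sampled native stack contains opaque interpreter frames, each of which was running some JS frames, and a useful profile wants those JS frames spliced in where the interpreter frame sits. This Python sketch assumes, hypothetically, that each sample provides the native stack plus one list of JS frames per interpreter activation, both innermost first. The `js_Interpret` marker and all frame names are placeholders, and JIT-compiled frames are not handled at all.

```python
from collections import Counter

# Assumption: the native frame name that marks an interpreter activation.
INTERPRETER_FRAME = "js_Interpret"


def merge_stacks(native_stack, js_segments):
    """Splice JS frames into a native stack, innermost first.

    js_segments[i] is the list of JS frames (innermost first) being run by
    the i-th interpreter frame in native_stack, counting from the innermost.
    """
    merged = []
    segments = iter(js_segments)
    for frame in native_stack:
        if frame == INTERPRETER_FRAME:
            # Replace the opaque interpreter frame with the JS frames it ran.
            merged.extend("js:" + f for f in next(segments, ["<unknown js>"]))
        else:
            merged.append("c++:" + frame)
    return merged


def self_time_profile(samples):
    """Count, across timer-driven samples, which merged frame was innermost."""
    counts = Counter()
    for native, js in samples:
        merged = merge_stacks(native, js)
        if merged:
            counts[merged[0]] += 1
    return counts


# One made-up sample: JS code calling into a C++ method through a bridge frame.
native = ["ExampleView::Sort", "ExampleBridge::Call", "js_Interpret", "main"]
js = [["sortFolder (example.js:42)", "onClick (example.js:10)"]]
print(merge_stacks(native, js))
```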