HTML and CSS provide awesome layout potential, but harnessing that potential to do your bidding can sometimes be problematic. I suspect all people who have written HTML documents have been in a situation where they have randomly permuted the document structure and CSS of something that should work in the hopes of evolving it into something that actually does work. Matching wits with a black box that is invulnerable to even pirate-grade profanity is generally not a pleasant experience.

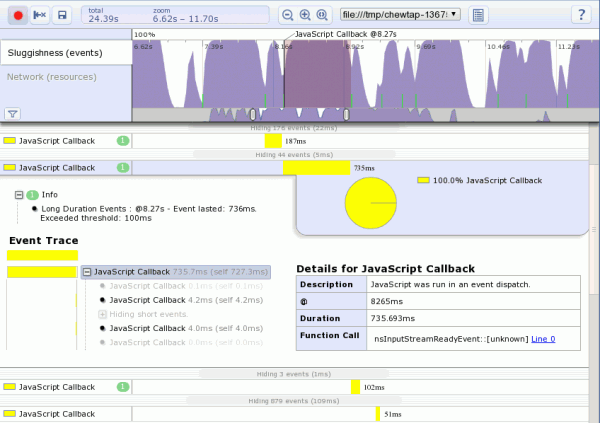

It turns out gecko has a way to let you see inside that black box. Well, actually, multiple ways. There’s a layout debugger (that seems broken on trunk?) that can display visual overlays for box sizes/events/reflow counts and dump info about paints/invalidations/events as they happen, as well as the current content tree and frames. Even better, gecko’s frame reflow debugging mechanism will dump most of the inputs and outputs of each stage of reflow calculations as they happen. With some comparatively minor patches[1] we can augment this information so that we can isolate reflow decisions to their origin presentation shell/associated URL and so that we know the tag name, element id, and class information on HTML nodes subjected to reflow calculations. A reasonably sane person would want to do this if they were planning to do a lot of potentially complicated HTML layout work and would a) benefit from better understanding how layout actually works, b) not want to propagate layout cargo culting or its ilk from the code being replaced, c) not want to waste days of their lives the next time this happens, d) like to help locate and fix layout bugs, if bugs they be, so that all might benefit.

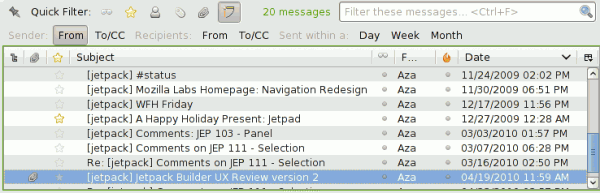

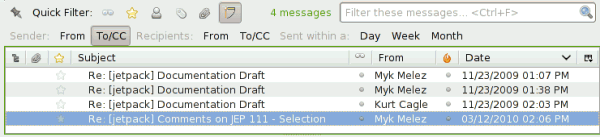

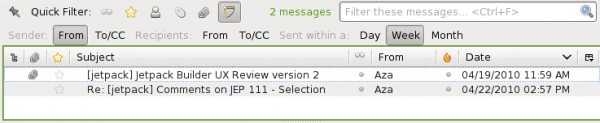

Of course, with logs that effectively amount to execution traces, examining them by hand is frequently intractable unless you really know what you’re looking for or are dealing with a toy example. My non-reduced problem case resulted in 58,107 lines, for one. So writing a tool is a good idea, and writing it in JS using Jetpack doubly so.

In any event, the problem is I am trying to use the flexible box model to create an area of the screen that takes up as much space as possible. In this space I want to be able to house a virtual scrolling widget so I use “overflow: hidden”. Regrettably, when my logic goes to populate the div, the box ends up resizing itself and now the whole page wants to scroll. Very sad. (Things work out okay with an explicitly sized box which is why my unit tests for the virtual scrolling widget pass…)

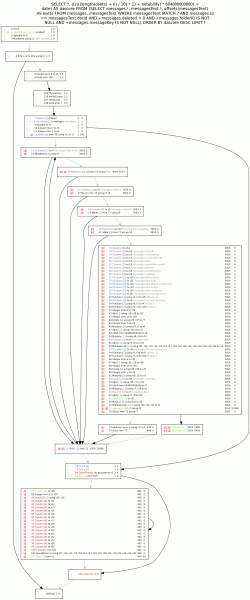

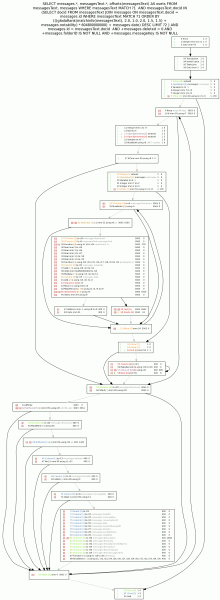

Let’s formulate a query on the div of interest (which I will conceal) and then see what the first little bit of output is:

    *** Box 24 tag:div id: classes:default-bugzilla-ui-bug-page-runBox
    *** scroll 25 tag:div id: classes:default-bugzilla-ui-bug-page-runs
    scroll 25 variant 1 (parent 24) first line 406
      why: GetPrefSize
      inputs: boxAvail: 0,UC
              boxLast: 0,0
              reflowAvailable: 0,UC
              reflowComputed: 0,UC
              reflowExtra: dirty v-resize
      output: prefWidth: 0
              minWidth: 0
              reflowDims: 0,0
              prefSize: 0,0
              minSize: 0,0
              maxSize: UC,UC
      parent concluded: minSize: 0,0
                        maxSize: UC,UC
                        prefSize: 2,0
    scroll 25 variant 2 (parent 24) first line 406
      why: Layout
      inputs: boxAvail: 771,1684
              boxLast: 0,0
              reflowAvailable: 771,UC
              reflowComputed: 771,1684
              reflowExtra: dirty dirty-children h-resize v-resize
      output: prefSize: 0,0
              minSize: 0,0
              maxSize: UC,UC
              reflowDims: 771,1684
              layout: 2,0,771,1684
      parent concluded: minSize: 0,0
                        maxSize: UC,UC
                        prefSize: 0,0
                        layout: 0,0,773,1684

This is the general pattern we will see in the reflows. The parent will ask the scroll frame what size it wants to be, and it will usually respond with “ridiculously wide but not so tall”. (Not in this first base case, but the next one responds with a prefSize of “1960,449”, and that’s pixels.) The parent will then perform layout and say “no, you need to be taller than you want to be”, at least until I start cramming stuff in there.
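If you want to poke at summaries like the one above with a script rather than by eye, here is a minimal Python sketch of reading the two recurring pieces: the record header and a width,height pair. It is not the Jetpack tool from this post. The regular expression is inferred only from the lines quoted here, `parse_header` and `parse_size` are hypothetical helper names, and it assumes “UC” marks an unconstrained dimension.

```python
import re
from typing import Optional, Tuple

# Header shape copied from the summaries quoted in this post, e.g.
# "scroll 25 variant 2 (parent 24) first line 406".
HEADER_RE = re.compile(
    r"^(?P<kind>\w+) (?P<frame>\d+) variant (?P<variant>\d+) "
    r"\(parent (?P<parent>\d+)\) first line (?P<line>\d+)$"
)

Size = Tuple[Optional[int], Optional[int]]


def parse_size(text: str) -> Size:
    """Turn "771,UC" into (771, None).

    Assumption: "UC" means the dimension is unconstrained, so it is
    represented as None rather than as a number.
    """
    width, height = (part.strip() for part in text.split(","))
    return tuple(None if p == "UC" else int(p) for p in (width, height))


def parse_header(line: str) -> dict:
    """Split a record header into its frame kind and numeric ids."""
    match = HEADER_RE.match(line.strip())
    if match is None:
        raise ValueError(f"not a record header: {line!r}")
    fields = match.groupdict()
    return {k: (v if k == "kind" else int(v)) for k, v in fields.items()}


header = parse_header("scroll 25 variant 2 (parent 24) first line 406")
avail = parse_size("771,UC")
print(header["frame"], header["variant"], avail)
```

Keeping unconstrained values as `None` means later comparisons have to handle them explicitly instead of silently treating them as a very large or very small number.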

So we skim down the output to find out where things first went off the rails…

    scroll 25 variant 16 (parent 24) first line 20548
      why: GetPrefSize
      inputs: boxAvail: 1960,UC
              boxLast: 771,1686
              reflowAvailable: 1960,UC
              reflowComputed: 1960,UC
              reflowExtra: dirty-children h-resize v-resize
      output: prefWidth: 1960
              minWidth: 352
              reflowDims: 1960,1755
              prefSize: 1960,1755
              minSize: 352,1755
              maxSize: UC,UC
      parent concluded: minSize: 0,0
                        maxSize: UC,UC
                        prefSize: 1962,1755
    scroll 25 variant 17 (parent 24) first line 20548
      why: Layout
      inputs: boxAvail: 771,1755
              boxLast: 1960,1755
              reflowAvailable: 771,UC
              reflowComputed: 771,1755
              reflowExtra: dirty-children h-resize
      output: prefSize: 0,0
              minSize: 352,1755
              maxSize: UC,UC
              reflowDims: 771,1755
              layout: 2,0,771,1755
      parent concluded: minSize: 0,0
                        maxSize: UC,UC
                        prefSize: 0,0
                        layout: 0,0,773,1755

Okay, that looks pretty likely to be the area of concern. The parent asked it for its ideal size, so it told it, but then the parent apparently decided to enlarge itself too. That is not what we wanted. We would have been cool if just the scroll #25 enlarged itself (or its block child #26 that corresponds to the same content node but which I have elided because it always says the same thing as its parent #25) since some frame needs to end up holding the overflow coordinate space.
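The “skim until it goes off the rails” step can be approximated mechanically. The sketch below is one possible heuristic, not what the processing tool does: flag the first GetPrefSize pass whose preferred height exceeds the height the box last had. The records are hand-copied from the two GetPrefSize summaries in this post, and the dictionary keys and the function name `first_height_overshoot` are made up for illustration.

```python
from typing import List, Optional, Tuple

Size = Tuple[Optional[int], Optional[int]]  # None = unconstrained ("UC")

# Hand-copied from the GetPrefSize summaries in this post; a real run
# would have many more variants in between.
PREF_SIZE_PASSES: List[dict] = [
    {"variant": 1, "box_last": (0, 0), "pref_size": (0, 0)},
    {"variant": 16, "box_last": (771, 1686), "pref_size": (1960, 1755)},
]


def first_height_overshoot(passes: List[dict]) -> Optional[dict]:
    """Return the first pass whose preferred height exceeds the last box height."""
    for record in passes:
        last_height = record["box_last"][1]
        pref_height = record["pref_size"][1]
        if last_height is None or pref_height is None:
            continue  # unconstrained values cannot be compared
        if pref_height > last_height:
            return record
    return None


suspect = first_height_overshoot(PREF_SIZE_PASSES)
print(suspect["variant"] if suspect else "no overshoot found")  # prints 16
```

On these two records it lands on variant 16, the same pass singled out above, since 1755 is larger than the previous box height of 1686.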

Thus concludes part 1 of our exciting saga. In part 2, we hopefully figure out what the problem is and how to fix it. Lest anyone suggest the root problem is that I am completely off base and am not remotely reasonably sane for choosing this as a strategy to solve the problem… it works in chrome. Which is not to say that my html/css is correct and firefox’s layout is wrong; it’s quite possible for layout engines to err or deal with unspecified behaviour cases in my favor, after all. But it does make me want to understand what the layout engine is thinking and be able to do so with a minimum of effort in the future, since I doubt this is the last time I will not immediately understand the problem or that layout engines will differ in their behaviour.

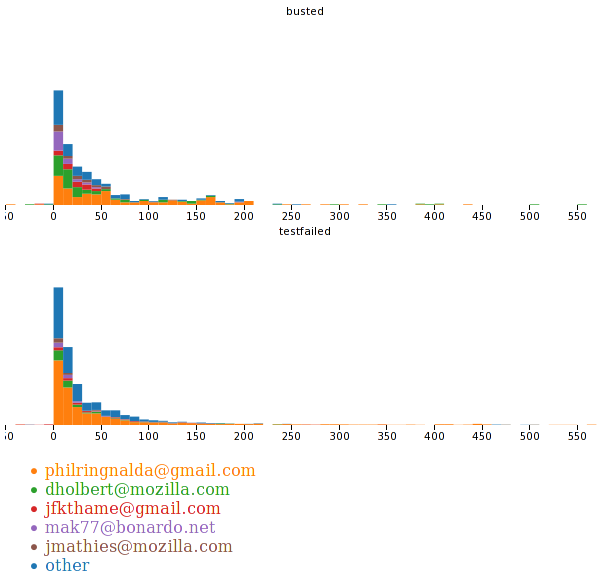

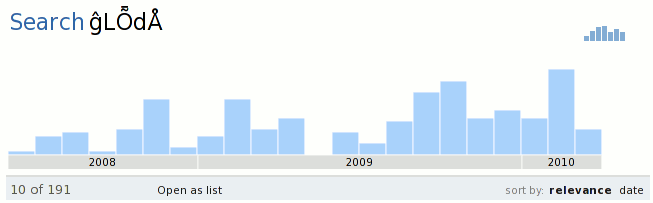

For those who want to play along at home: the raw gzip’d /tmp/framedebug file (gunzip to /tmp/framedebug) that is the entirety of the trunk firefox log with debug output, the spliced output just for the one window derived from an invocation of “cfx run splice” (it will show up under the URL in /tmp/framedumps), and the output of “cfx run summarize serial-12-0 summarize unique 22,24,25,26”. Those unique identifiers are deterministic but arbitrary values for the given file. We discovered them by querying on the CSS class with “cfx run summarize serial-12-0 summarize class wlib-wlib-virt-wlib-virt-container-root”. The hg repo for the processing tool is here, the mozilla-central patches are: first and second. A minor jetpack patch is also required for the command line stuff to work.

1: I was initially trying to avoid patching anything. This didn’t work out, but it did cause the initial log file splicing logic to leverage the arena allocation scheme of presentation shells to allow us to map frames back to their URLs. Sadly, it turned out the arena allocation blocks requested from the upstream allocators are really small (4k or 1k) and all from the same source, so I had to instrument the allocation as well as add debug output of the window/docshell/presshell linkages. The output adds an unacceptable additional level of noise to the default DEBUG case; the right thing to do is likely to have the reflow log debugging emit the document URL before each logging outburst if it is different from the last outburst.