The Problem

One of my focuses at MoMo is to improve the plight of Thunderbird extension developers. An important aspect of this is improving the platform they are exposed to. Any platform entails a fair amount of complexity; the trick is that, learning-wise, you only pay for the parts that are new to you.

The ‘web’ as a platform is not particularly simple; it’s got a lot of pieces, some of which are fantastically complex (e.g., layout engines). But those bits are frequently orthogonal, can be learned incrementally, have reams of available documentation and extensive tools that aid understanding, and, most importantly, are already reasonably well known to a comparatively large population. The further you get from the web-become-platform, the more new things you need to learn and the more hand-holding you need, unless you are going to just read the source or trial-and-error your way through. (Not that those are bad ways to roll, but not a lot of people make it all the way through those gauntlets.)

I am working to make Thunderbird more extensible in more than a replace-some-function/widget-and-hope-no-other-extensions-had-similar-ideas sort of way. I am also working to make Thunderbird and its extensions more scalable and performant without requiring a lot of engineering work on the part of every extension. This entails new mini-platforms and non-trivial new things to learn.

There is, of course, no point in building a spaceship if no one is going to fly it into space and fight space pirates. Which is to say, the training program for astronauts with all its sword-fighting lessons is just as important as the spaceship, and just buying them each a copy of “sword-fighting for dummies who live in the future” won’t cut it.

Translating this into modern-day, pre-space-pirate terminology: it would be dumb to make a super fancy extension API that no one uses. And given that the platform is far enough from both the pure web and universally familiar subject domains, a lot of hand-holding is in order. Since there is no pre-existing development community familiar with the framework, those hands can’t practically be human ones either.

The Requirements

I therefore assert that it is important for the documentation to be able to do the following:

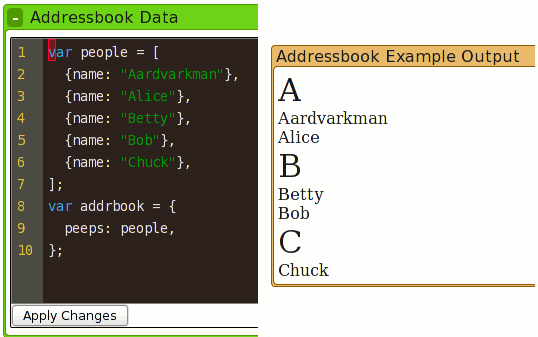

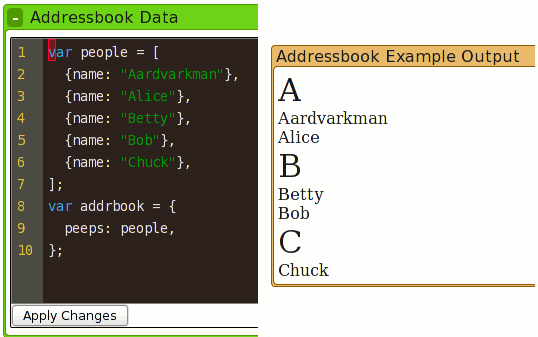

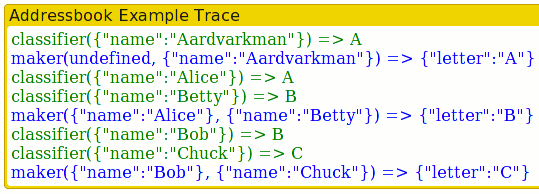

- Start with an explained, working example.

- Let the student modify the example with as little work on their part as possible so that they can improve their mental model of how things actually work.

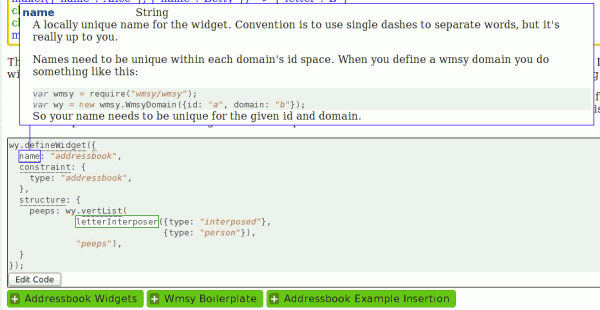

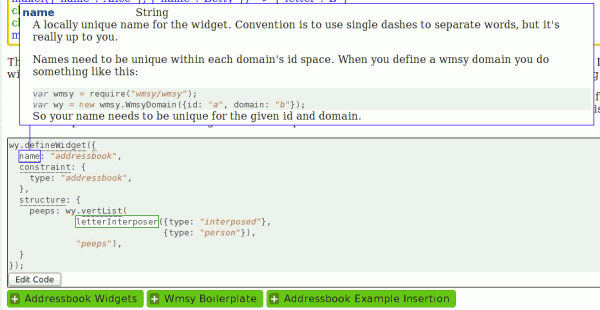

- Link to other relevant documentation that explains what is going on, especially reference/API docs, without the user having to open a browser window and manually go search/cross-reference things for themselves.

- Let the student convert the modified example into something they can then use as the basis for an extension.

The In-Process Solution: Narscribblus

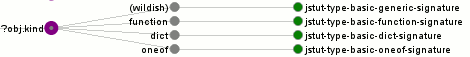

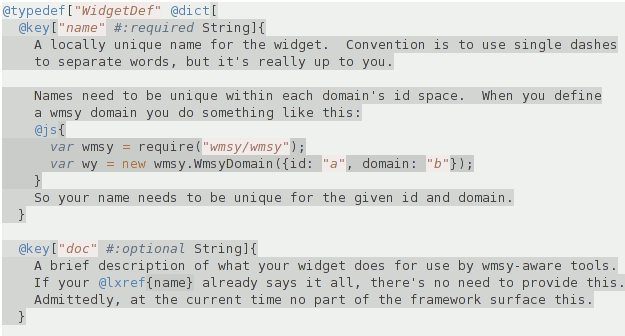

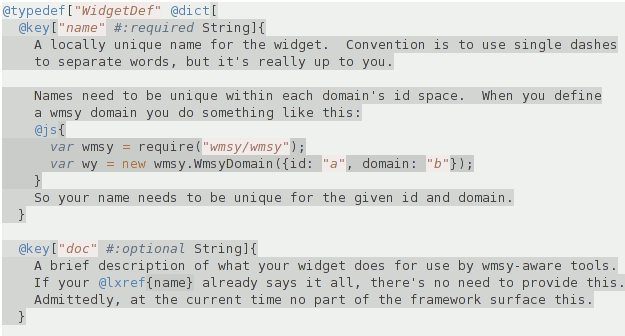

So, I honestly was quite willing to settle for an existing solution that was anywhere close to what I needed. Specifically, I wanted the ability to automatically deep-link source code to the most appropriate documentation for the bits at hand. It has become quite common to have JS APIs that take an object where you can have a non-trivial number of keys with very specific semantics, and my new developer-friendly(-ish) APIs are no exception.

Unfortunately, most existing JavaScript documentation tools are based on the doxygen/JavaDoc model of markup that:

- Was built for static languages where your types need to be defined. You can then document each component of the type by hanging doc-blocks off them. In contrast, in JS, if you have a complex Object/dictionary argument that you want to hang stuff off of, your best bet may be to just create a dummy object/type for documentation purposes. JSDoc and friends do support a somewhat enriched syntax like “@param arg.attr”, but run into the fact that the syntax…

- Is basically ad-hoc with limited extensibility. I’m not talking about the ability to add additional doctags or declare specific regions of markup that should be passed through a plugin, which is pretty common. In this case, I mean that it is very easy to hit a wall in the markup language that you can’t resolve without making an end-run around the existing markup language entirely. As per the previous bullet point, if you want to nest rich type definitions, you can quickly run out of road.

The net result is that it’s hard to even describe the data types you are working with, let alone have tools that are able to infer links into their nested structure.
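To make the complaint concrete, here is roughly what the JSDoc-style dotted `@param` syntax looks like for a dictionary-style argument. The function `createFolderView` and its option keys are hypothetical, invented purely for illustration. Once `options.sort` itself needs to be a dictionary, each level has to be spelled out as yet another flat dotted path, and nothing in the markup connects the doc-block to the object literals that callers actually write.

```javascript
/**
 * Hypothetical API taking a dictionary-style options object.
 *
 * @param {string} folderUri  URI of the folder to display.
 * @param {Object} [options]
 * @param {boolean} [options.threaded=false]  Group messages into threads.
 * @param {Object} [options.sort]  Nested dictionary: every level must be
 *     flattened into another dotted @param path.
 * @param {string} [options.sort.key="date"]  Field to sort by.
 * @param {boolean} [options.sort.ascending=true]  Sort direction.
 * @returns {Object} The normalized view description.
 */
function createFolderView(folderUri, options = {}) {
  const sort = options.sort || {};
  return {
    folderUri,
    threaded: Boolean(options.threaded),
    sortKey: sort.key || "date",
    ascending: sort.ascending !== false,
  };
}

// Callers write object literals; a documentation tool has to work out on
// its own that "key" below corresponds to the options.sort.key doc entry.
const view = createFolderView("mailbox://inbox", {
  threaded: true,
  sort: { key: "subject", ascending: false },
});
console.log(view);
```

Linking the `key` inside that call back to its doc entry requires understanding the call's structure, which is exactly what markup-only tools lack.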

So what is my solution?

- Steal as much as possible from Scribble, the documentation tool of Racket (formerly PLT Scheme). To get a quick understanding of the brilliance of Racket and Scribble, check out the quick introduction to Racket. Those of you who don’t click through are missing out on examples that automatically hyperlink to the documentation for invoked methods, plus pictures capturing the results of the output in the document.

- We steal the syntax insofar as it is possible without implementing a Scheme interpreter. The syntax amounts to @command[s-expression stuff where whitespace does not matter]{text stuff which can have more @command stuff in it and where whitespace generally does matter}. The brilliance is that everything is executed, so there are no heuristics for you to guess at that then fall down.

- Our limitation is that Racket, being a prefix language with reader macros, can have entire documents processed in the same fashion as source code and fully understood by the text editor; such purity is somewhat beyond us. But we do what we can.

- Use narcissus, Brendan Eich’s/Mozilla’s JS meta-circular interpreter thing, to handle parsing JavaScript. Although we don’t have reader macros, we play at having them. If you’ve ever tried to parse JavaScript, you know it’s a nightmare: thanks to the regexp literal syntax, the lexer has to be aware of the parser’s state. So, in order to parse inline JavaScript without depending on weird escaping syntaxes, our document parser hands off to narcissus so that JavaScript gets parsed as JavaScript; we just break out when we hit our closing squiggly brace. No getting tricked by regular expressions, comments, etc.
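As a rough illustration of the `@command[...]{...}` syntax, here is a deliberately naive reader sketch. It is not narscribblus’s parser; the function names and the returned node shape are hypothetical. It finds the closing brace purely by counting `{` and `}`, which works for prose and nested @-forms but is exactly what a regular expression literal like `/}/` breaks, hence the hand-off to a real JavaScript lexer/parser.

```javascript
// Naive reader for @name[args]{body}. Hypothetical sketch, not narscribblus.
// Returns { node, end }, where `end` is the index just past the form.
function readAtExpr(src, start) {
  if (src[start] !== "@") throw new SyntaxError("expected @ at " + start);
  let i = start + 1;
  const nameMatch = /^[\w-]+/.exec(src.slice(i));
  if (!nameMatch) throw new SyntaxError("expected command name at " + i);
  const name = nameMatch[0];
  i += name.length;

  let args = null;
  if (src[i] === "[") {
    const close = findClose(src, i, "[", "]");
    args = src.slice(i + 1, close).trim(); // whitespace is insignificant here
    i = close + 1;
  }

  let body = null;
  if (src[i] === "{") {
    const close = findClose(src, i, "{", "}");
    body = parseText(src.slice(i + 1, close)); // whitespace is preserved here
    i = close + 1;
  }
  return { node: { name, args, body }, end: i };
}

// Find the delimiter matching src[open] by depth counting alone. This is the
// naive part: it knows nothing about strings, comments, or regexp literals.
function findClose(src, open, openCh, closeCh) {
  let depth = 0;
  for (let i = open; i < src.length; i++) {
    if (src[i] === openCh) depth++;
    else if (src[i] === closeCh && --depth === 0) return i;
  }
  throw new SyntaxError("unbalanced " + openCh + " at " + open);
}

// Split text into literal strings and nested @-forms.
function parseText(text) {
  const parts = [];
  let i = 0;
  while (i < text.length) {
    const at = text.indexOf("@", i);
    if (at === -1) { parts.push(text.slice(i)); break; }
    if (at > i) parts.push(text.slice(i, at));
    const { node, end } = readAtExpr(text, at);
    parts.push(node);
    i = end;
  }
  return parts;
}

const doc = readAtExpr("@section[level: 2]{See @code{frob()} for details.}", 0).node;
console.log(JSON.stringify(doc));

// The failure mode: the "}" inside the regexp literal ends the body early,
// so broken.body is ["var re = /"].
const broken = readAtExpr("@js{var re = /}/;}", 0).node;
console.log(broken.body);
```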

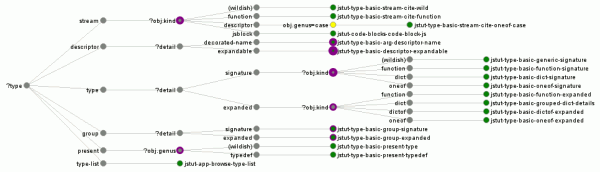

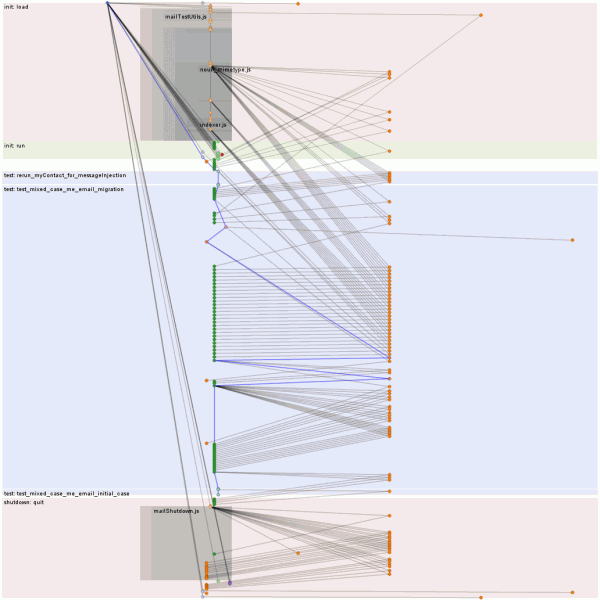

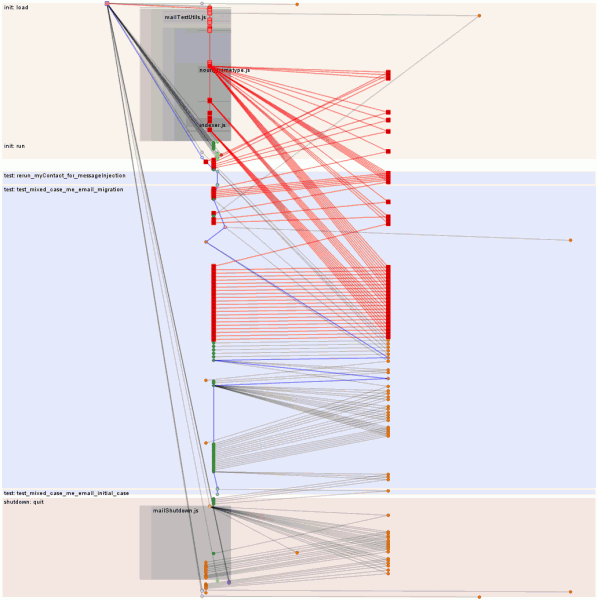

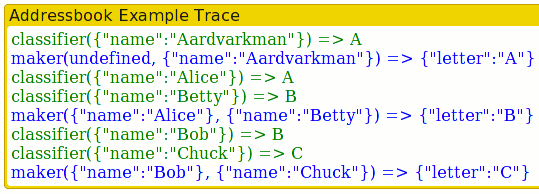

- Use the abstract interpreter logic from Patrick Walton’s jsctags (whose CommonJS-ified narcissus we also stole as the basis for our hacked-up one) to drive the abstract interpretation that lets us linkify all our JavaScript code. The full narcissus stack is basically:

- The narcissus lexer has been modified to optionally produce a log of every token it emits, which drives the lowest level of syntax highlighting.

- The narcissus parser has been modified to, when generating a token log, link syntax tokens to their AST parse nodes.

- The abstract interpreter logic has been modified to annotate parse nodes with semantic links so that we can traverse the tokens and say “hey, this is attribute ‘foo’ in an object that is argument index 1 of an invocation of function ‘bar’” where we were able to resolve bar to a documented node somewhere. (We can also infer some object/class organization as a result of the limited abstract interpretation.)

- We do not use any of the fancy static analysis that has been going on lately with DoctorJS. Automated stuff is sweet and would be nice to hook in, but the goal here is friendly documentation.

- The abstract interpreter has been given an implementation of CommonJS require that causes it to load other source documents and recursively process them (including annotating documentation blocks onto them).

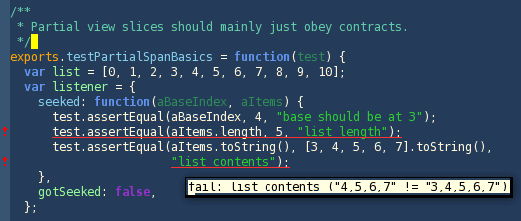

- We use bespin as the text editor to let you interactively edit code and then see the changes. Unfortunately, I did not hook bespin up to the syntaxy magic we get when we are not using bespin. I punted because of CommonJS loader snafus. I did, however, make the ‘apply changes’ button use narcissus to syntax check things (with surprisingly useful error messages in some cases).
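The semantic description quoted above (“attribute ‘foo’ in an object that is argument index 1 of an invocation of function ‘bar’”) can be sketched as a walk outward from a token-log entry through its parse nodes. This is a minimal sketch under stated assumptions: the node shapes are a simplified, hypothetical stand-in for narcissus’s parse nodes (whose real types and fields differ), the callee is taken by name rather than resolved through abstract interpretation, and the `docIndex` table and its key format are invented for illustration.

```javascript
// Simplified, hypothetical parse-node shapes (narcissus's real ones differ).
// Each node records its parent so a token can walk outward.
function node(type, props, children = []) {
  const n = { type, ...props, children, parent: null };
  for (const c of children) c.parent = n;
  return n;
}

// Hand-built tree for: bar(x, { foo: 1 })
// A call node's children are its arguments; the callee is stored by name.
const fooProp = node("property", { key: "foo" }, [node("number", { value: 1 })]);
const objLit = node("object", {}, [fooProp]);
const call = node("call", { callee: "bar" }, [node("identifier", { name: "x" }), objLit]);

// A token-log entry links a source token back to its parse node.
const fooToken = { text: "foo", node: fooProp };

// Walk up from the token's node, collecting property keys until we reach
// an argument of the innermost enclosing call, e.g. "bar/arg1/foo".
function semanticPath(tok) {
  const segments = [];
  let n = tok.node;
  while (n) {
    const parent = n.parent;
    if (n.type === "property") segments.unshift(n.key);
    if (parent && parent.type === "call") {
      segments.unshift("arg" + parent.children.indexOf(n));
      segments.unshift(parent.callee);
      break; // the innermost call is the documented context
    }
    n = parent;
  }
  return segments.join("/");
}

// Hypothetical documentation index keyed by semantic path.
const docIndex = { "bar/arg1/foo": "docs.html#bar.options.foo" };

const linkPath = semanticPath(fooToken);
console.log(linkPath, "->", docIndex[linkPath]);
```

Computing a path per token keeps the sketch short; an annotating interpreter would instead attach the link to the node once while it interprets the call.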

Extra brief nutshell facts:

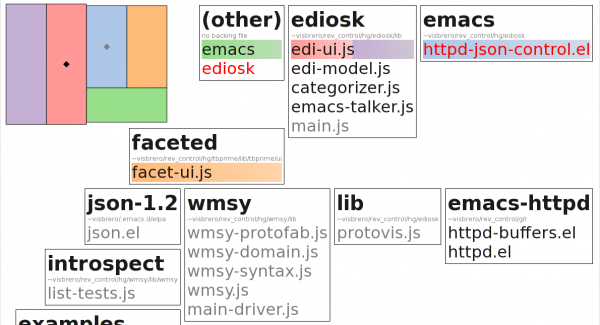

- It’s all CommonJS code. The web-enabled version, which I link to above and below, runs using a slightly modified version of Gozala’s teleport loader. It can also run privileged under Jetpack, but there are a few unimplemented sharp edges relating to Jetpack loader semantics. (Teleport has been modified mainly to play more like Jetpack, especially in cases where its aggressive regexes go looking for Jetpack libs that aren’t needed on the web.) For mindshare reasons, I will probably migrate off of teleport for web loading and may consider adding some degree of node.js support. The interactive functionality currently reaches directly into the DOM, so some minor changes would be required for the server model, but that was all anticipated. (And the non-interactive “manual” language already outputs plain HTML documents.)

- The web version uses a loader to generate a page that gets displayed in an iframe inside the outer page. The Jetpack version generates a page and then does horrible things using Atul’s custom-protocol mechanism to get the page displayed, at the cost of defying normal browser navigation; it should either move to an encapsulated loader or implement a proper custom protocol.
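For readers who haven’t looked inside a CommonJS loader, the core mechanics that teleport, Jetpack’s loader, and the abstract interpreter’s `require` all have to provide are small: wrap each module’s source in a function that receives `require`, `exports`, and `module`, cache the result, and hand back `module.exports`. The sketch below is hypothetical: it reads sources from an in-memory table (a web loader would fetch them via XHR) and omits relative-id resolution and everything else that makes real loaders complicated.

```javascript
// Hypothetical in-memory module table; a web loader would fetch these via XHR.
const sources = {
  greeting: "exports.greet = function (who) { return 'Hello, ' + who; };",
  main: "var g = require('greeting');\n" +
        "module.exports = g.greet('Thunderbird');",
};

function makeRequire(table) {
  const cache = {};
  const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

  function require(id) {
    if (has(cache, id)) return cache[id].exports;
    if (!has(table, id)) throw new Error("module not found: " + id);
    const module = { id, exports: {} };
    cache[id] = module; // cache before running so cycles see partial exports
    // Wrap the module body so its top-level vars stay private to the module.
    const factory = new Function("require", "exports", "module", table[id]);
    factory(require, module.exports, module);
    return module.exports;
  }
  return require;
}

const load = makeRequire(sources);
console.log(load("main")); // prints: Hello, Thunderbird
```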

Anywho, there is still a lot of work that can and will be done (more ‘can’ than ‘will’), but I think I’ve got all the big rocks taken care of and things aren’t just blue-sky dreams, so I figured I’d give a brief intro for those who are interested.

Feel free to check out the live example interactive tutorialish thing linked to in some of the images, and its syntax-highlighted source. Keep in mind that it currently issues lots of inefficient XHRs, so it could take a few seconds for things to load. The type hierarchy emission and styling likely still have a number of issues, including potential failures to pop up on clicks. (Oh, and you need to click on the source of the popup to toggle it…)

Here’s a bonus example to look at too, keeping in mind that the first few blocks using the elided js magic have not yet been wrapped in something that provides them with the semantic context to do magic linking. And the narscribblus repo is here.