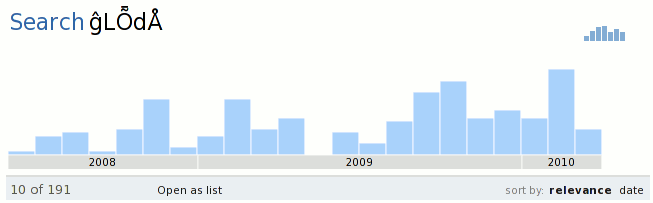

Thanks to the efforts of Makoto Kato (jp-blog en-blog twitter) whom you may remember as the bringer of CJK bi-gram tokenization, I have just pushed tokenizer support for case-folding, accent-folding, and voiced sound mark magic to comm-central. The net result is that searches for “ĝLȬdÅ” will now find “gloda” and vice versa. Less crazy searches should also be improved.

Starting tomorrow, Thunderbird (in the guise of Lanikai) 3.1 nightlies will have this capability and it will also show up in the next beta (beta 2). No action on your part is required; when you start running a build with this capability your existing gloda database will get blown away and indexing will start from scratch. If you go back to an older version for some reason after having used the updated build, the old build will also decide to blow away your database, so try to avoid making a habit of switching between them.

We also have some significant performance enhancements related to gloda search and indexing slated for beta 2, not to mention* usability enhancements to avoid the dreaded gloda search/quick search toggle dance.

* This expression is weird given that I go on to mention the allegedly unmentioned, but it still makes more sense than “all but X”.

What if A and Å are different characters in your alphabet and you _don’t_ want them treated as the same character?

This accent-folding will be nice for French, where accents are optional on capital letters (and for English, where they are often omitted in words where “good” dictionaries mention them, as in resume vs. résumé). I suppose Œ OE Oe oe œ and Æ AE Ae ae æ equivalences will be included too? And what about German ö oe, ß ss, etc.?

@Ernst: Speaking for myself alone, I’d say that in a mailer it’s better to have a little too much data than miss something you need. Ideally (but it seems difficult to achieve in a mailer, where emails have no identified machine-readable language setting) there ought to be a language-dependent setting, so that e.g. (IIUC) in Danish you could have Aa = Å as in Ålborg = Aalborg, and ae = æ in French, English and Danish (and Latin 😉 ) but = ä in German.

Ernst, is that a real example?

Tony, the character-folding is based on the unicode database’s decomposition mapping and case-folding mapping. We map to the first character in the decomposition only, It does not appear that the decomposition mappings cover oe/ae. The lookup table mechanism we use is not capable of expanding a single character into two characters at this time, either.

The solution to all limitations starts with unit tests. Our unit tests in this case consist of a string that is part of the message and then a series of search strings that should (or should not) match the message. Once we have those we can move on to the implementation specifics.

Great. I use the libunac to do that in my software. libunac seems to work with a mapping table. This is interesting if you have a generic solution, that would allow me to remove a dependence. May you post a link to indicate where is this function exactly, that we may have a look?

We have similar bugs for Firefox. Would you like to help fixing these? 🙂

https://bugzilla.mozilla.org/show_bug.cgi?id=202251

Hmm… this seems to be a disagreement I have on accent folding with others on the AMO team. I’d so by and large (tags for example) foö => foo.

But I’m curious to find out more about decomposition maps. I’m also curious what things you did to make search CJK friendly… because that’s another pain-point for AMO.

Yes, the Swedish alphabet ends with UVWXYZÅÄÖ

http://en.wikipedia.org/wiki/Swedish_alphabet

“The letters ‹å›, ‹ä›, and ‹ö› are considered distinct letters in Swedish and are sorted after ‹z› as shown above”

Kelson, I think almost every solution to this problem is based off parsing the unicode database and building some form of mapping. I’m pretty confident libunac numbers among these from my brief survey of how other open source software accomplishes this goal. It is quite possible that libunac does a better job than us; the only guarantee I make is that we do a better job on these things than we did before. (Note: I think libunac may have been disqualified for our use because of license incompatibility, but I do not recall exactly.)

You can find our implementation at http://mxr.mozilla.org/comm-central/source/mailnews/extensions/fts3/

Tomer, our solution is specifically to address full-text search implemented using the SQLite FTS3 full-text search engine. Thunderbird actually continues to have the same problem for our search engine that powers the ‘quick search’, virtual folder/saved search, and ‘advanced search’ modes of operation. If we manage to fix that part of our system, that would do a better job of directly translating to Firefox. (And actually might be the exact same thing if we were to fix our ‘find in message’ implementation.)

Dave, please see the implementation at:

http://mxr.mozilla.org/comm-central/source/mailnews/extensions/fts3/src/fts3_porter.c

I have tried to add copious commenting to explain how things work. In a nutshell, though, CJK is detected and indexed as bi-grams. When a search is made for a string of CJK that is longer than 2 characters, it gets converted into a phrase search based on the overlap. So “ABCD” becomes “AB” overlapping “BC” overlapping “CD”.

Ernst, sorry, I should have been more specific. Does the mapping of those characters to the ASCII characters result in unacceptable levels of false positives? I think we definitely have the problem where a post-tokenized ‘alborg’ is not equal to ‘aalborg’ now. We could likely resolve that by collapsing any observed ‘aa’ to ‘a’. My assumption on the mapping has been that the false positives are outweighed by the elimination of the false negatives.

Andrew: For another example, German ß (eszett or sharp s) uppercases to SS (which is also the uppercase of ss) and de_DE makes the difference between ß and ss in lowercase while de_CH doesn’t (because, it is said, there was no room for ß on Swiss German-and-French QWERTZ typewriter keyboards). Also, the recent spelling change of de_DE had many words’ spelling altered from ß to ss or vice-versa, so some confusion between ß and ss can be tolerated, even expected. It is, however, never acceptable to replace ß by a single s.

Tony, bad news on that front. The case-folding table tells us that “ß” maps to “ss” and our implementation turns that into just “s”. It sounds like we either need specialized mappings for the ss/ae/oe cases or a post-processing pass that collapses them consistently to a single character like it seems like we need for the alborg/aalborg case.

Well, <shrug>next release maybe?</shrug>